A/B testing is an important part of the ecommerce conversion optimization process.

It’s the step in the process when you try to validate your test hypothesis.

Here you set out to prove that your website changes will increase your conversion rate and, more importantly, your profits.

Sounds simple, right? Maybe not…

The truth is most people get A/B testing wrong.

And it doesn’t come down to poor A/B testing tools.

What it really comes down to is A/B testing best practices.

In this in-depth article you’ll learn 55 best practices to follow when split testing your website.

You’ll learn how to get your A/B split testing right and how to stop wasting valuable time, resources and dollars on bad website testing.

Here’s what you’ll learn in each section of the article…

- Should You Be A/B Testing?

- A/B Testing Mindset

- Planning A/B Tests

- A/B Testing Setup

- Running Your A/B Tests Right

- Interpreting A/B Test Results

Let’s get started.

This is part 4/5 in a series on ecommerce conversion rate optimization.

Should You Be A/B Testing?

To kick us off let’s first clarify if you should be using A/B testing at all in your business.

1. Low Traffic & Low Conversion Websites Don’t Need To A/B Test

If your website gets only a few visitors and your business is getting 10 sales a month, you shouldn’t be using A/B testing for conversion rate optimization.

If your tests take 6 months to run, you’re doing it wrong.

Giles’s take

“At this early stage in your business you shouldn’t use A/B testing as part of your CRO process.

Instead simply focus on customer learning, collect qualitative data from sources such as customer development interviews, make big changes to your website and business and watch your bank account.

Otherwise you’re simply wasting time and money on the wrong approach to CRO.”

The Right A/B Testing Mindset

Now we’ll learn what mindset you should have going into A/B testing to ensure you get the most value in the shortest amount of time.

2. Don’t Run A/A Tests

A/A tests are when you test two identical controls against each other and expect the same results.

Many people use A/A testing to verify they are collecting clean data and that their testing software is set up correctly.

Controversial, I know, but according to Craig Sullivan, A/A testing is a waste of time.

The truth is, too many other factors can come into play and skew the data: the quality assurance of your testing setup, underestimating the sample size needed to compare two identical creatives, the novelty effect and poor segmentation.

The focus of your testing schedule should be to reduce the resource cost to opportunity ratio. A/A testing does the opposite.

In fact, with A/A testing you use valuable testing time and learn nothing about your customer.

You’re testing for noise instead of signal.

Giles’s take

“There are too many sources of bias to use A/A testing as a method to check your testing tools are set up to collect clean data.

It may reveal large biases, but it is not the most efficient way to find problems. Quality assurance, segmentation and Google Analytics integration do that job better.

Segment your data and cross-check your results across multiple software products, not just your testing tool. Make sure to integrate with Google Analytics and watch out for small sample sizes.”

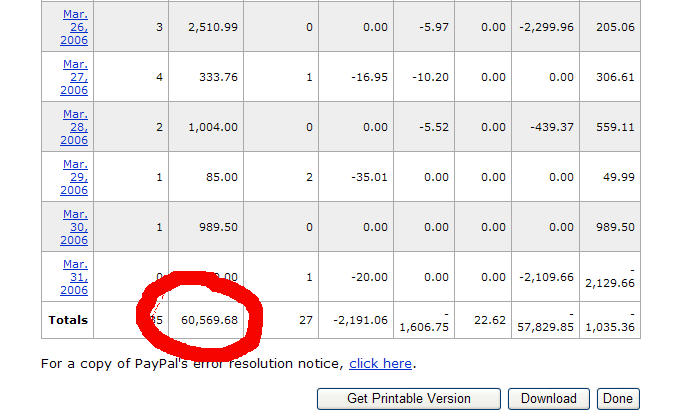

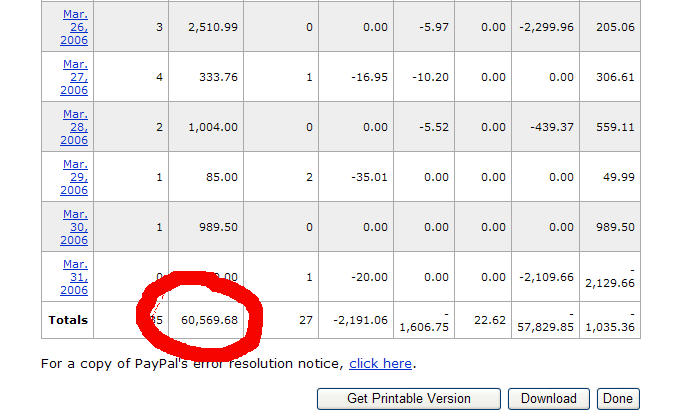

3. Tracking The Wrong Ecommerce KPIs

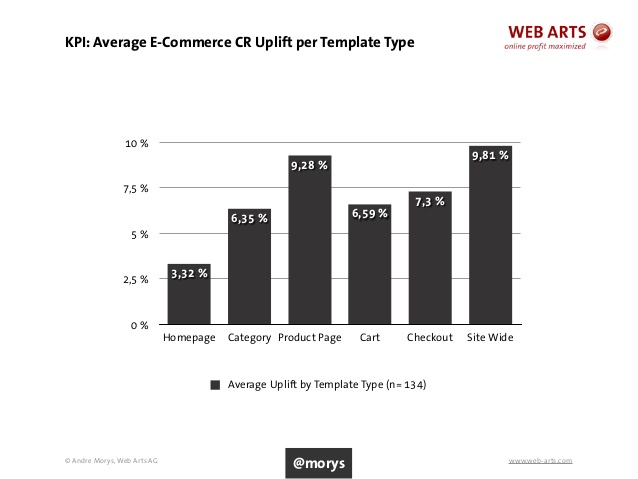

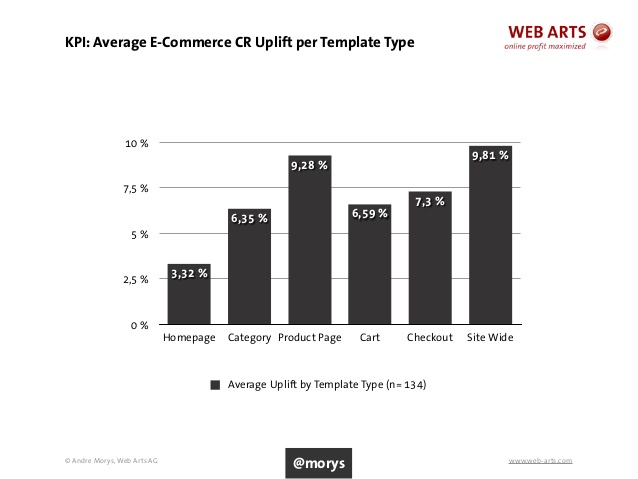

Andre Morys suggests that when evaluating success in ecommerce conversion optimization, you should focus on the bottom line, not the conversion rate.

What does this mean?

Basically:

Bottom Line = Revenue – VAT – Returns – Cost of Goods
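As a minimal sketch, the formula can be applied to each variation before you call a winner. All figures below are made up for illustration and are not taken from any case study, and `bottom_line` and `relative_change` are hypothetical helper names.

```python
def bottom_line(revenue, vat, returns, cost_of_goods):
    """Bottom line = revenue - VAT - returns - cost of goods."""
    return revenue - vat - returns - cost_of_goods


def relative_change(control, variation):
    """Relative change of the variation versus the control, as a fraction."""
    return (variation - control) / control


# Hypothetical numbers: a discount variation that sells more but earns less.
control = bottom_line(revenue=10_000, vat=2_000, returns=500, cost_of_goods=4_000)
discount = bottom_line(revenue=11_000, vat=2_200, returns=800, cost_of_goods=4_900)

print(f"Control bottom line:  {control}")
print(f"Discount bottom line: {discount}")
print(f"Change: {relative_change(control, discount):+.1%}")
```

In this made-up example revenue goes up while the bottom line goes down, which is exactly the trap a conversion-rate-only report hides.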

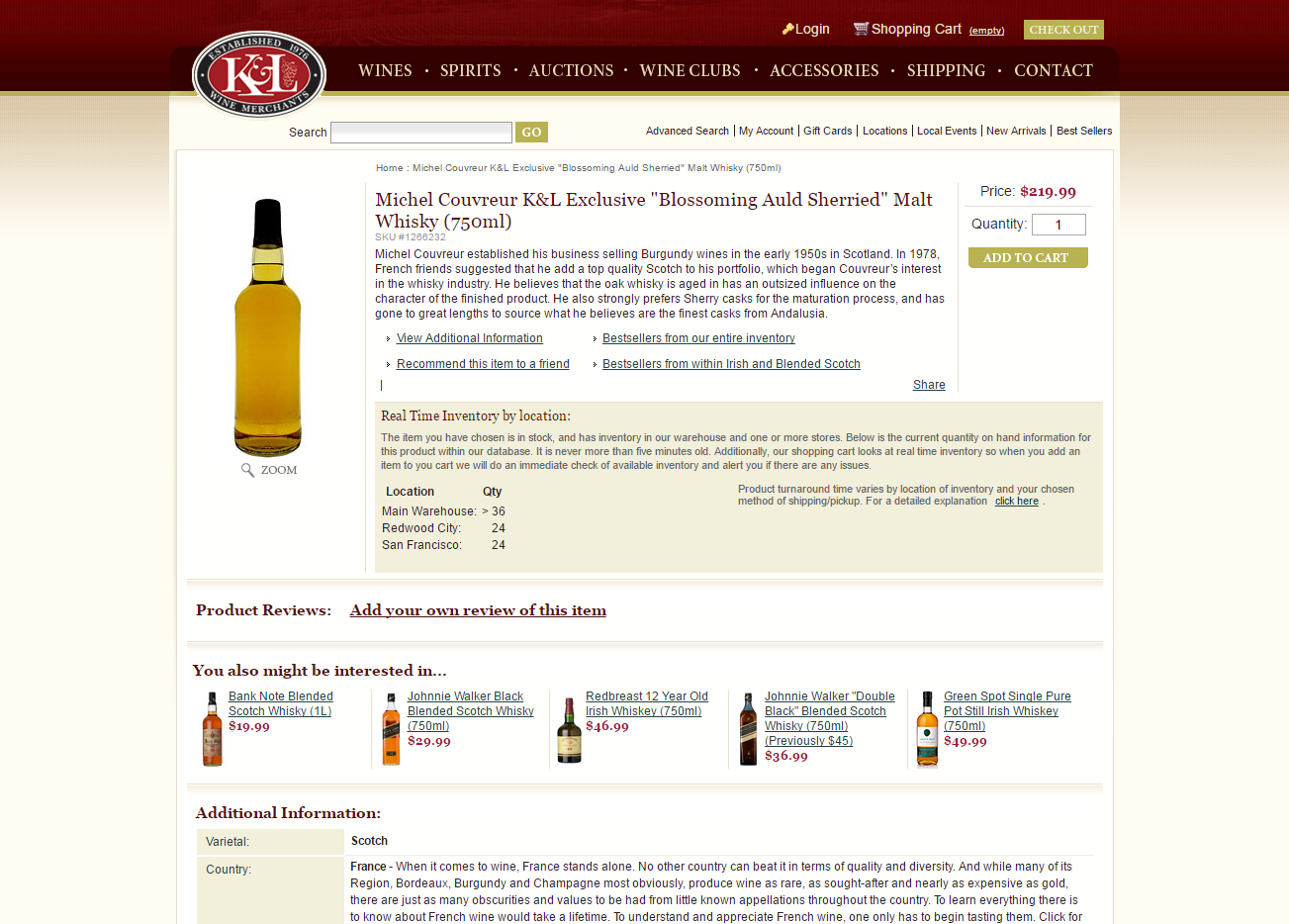

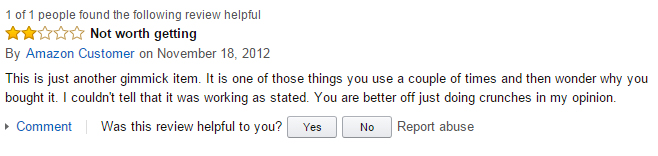

Case Study

In a case study he published, they got the following data:

Variation 1 – focus on discount: 13% uplift, -14% bottom line

Variation 2 – focus on value: 41% uplift, +22% bottom line

So variation 1 was a losing test even though the testing tool reported a winner.

The uplift for variation 2 in conversions was much bigger than the “real uplift” in bottom line.

4. Don’t Focus On Design, Focus On Profit Optimization

You all know design execution and usability are an important part of the conversion hierarchy.

But the truth is, if your messaging is wrong, it doesn’t matter how you dress it up.

It just won’t resonate with your target customer.

Don’t spend too much time and resources on design in time-limited projects. Copy changes, and more specifically value proposition changes, can be very powerful and are much quicker and cheaper to execute.

Giles’s take

“Of course there are no hard and fast rules. In some tests design will prove to be more important than copy changes.

However, many of us don’t give copy its due credit. Alhan Keser of Widerfunnel.com used to make this mistake too:

“I used to make the mistake of focusing entirely on design/usability-related tests. I didn’t give copy and its effect on user motivation enough credit.”

Make sure to focus on copy and value proposition changes in tests if your budget is low or testing time short.”

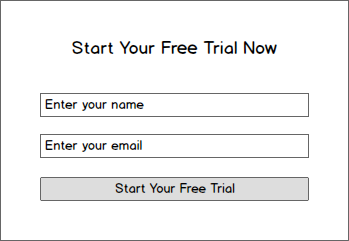

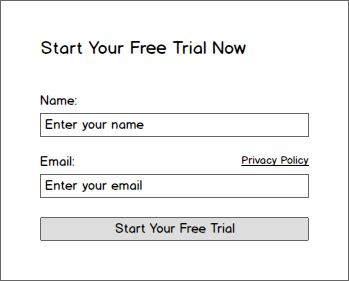

5. Be Creative With Execution For Better Test Results

Sujan Patel suggests that your mindset and approach to testing can be as important as technical best practices.

For example he says:

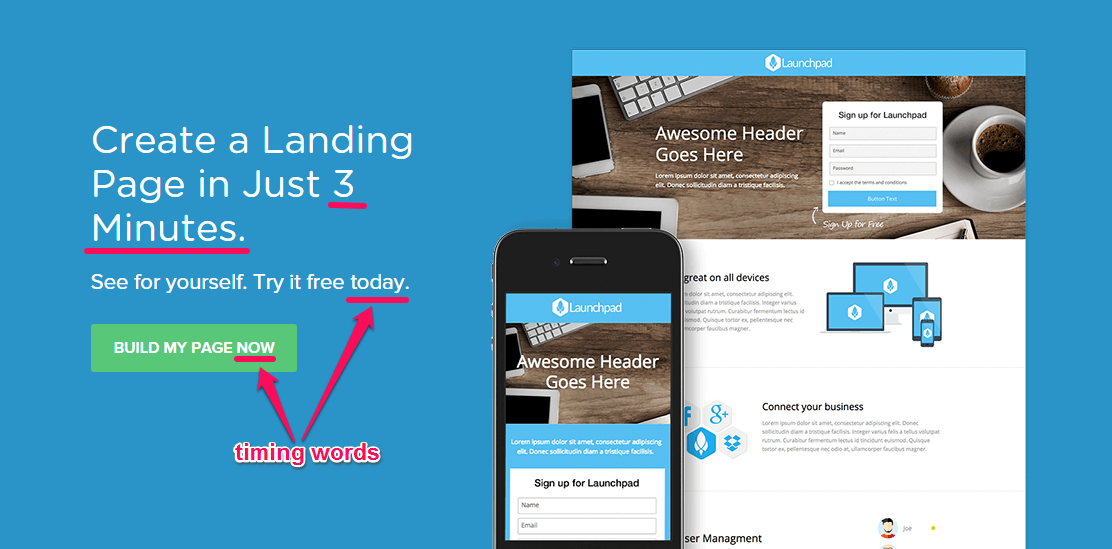

“One of the easiest ways to drive more conversions on your page is by testing more personalized CTA language against the standard (and boring) CTA language that you’re used to seeing everywhere. For example, in my ebook 100 Days of Growth, I reference the fact that Uber uses the text “Become a Driver” in their CTA button, rather than “sign up” or “join now.” This strategy is more personal, more direct, and has the potential to be a lot more effective.”

As Sujan points out here, the CTA is more personal and actually focuses on the outcome of clicking the CTA not the action itself.

Be creative with your copy, design and development execution. Don’t just test the status quo, challenge it.

6. Don’t Condition The Experiment With Customer Preconceptions

It’s hard to ignore your preconceptions about the customer when testing, but if you don’t take an unbiased approach to testing you can condition the experiment.

Alfonso Prim from Innokabi.com advises:

“The main thing in A/B testing for me is not a technical issue. It is a concept issue: Forget the image of the customer that you have in your brain before the experiment, and don’t try to discern the results… because this can condition the experiment.”

7. Disregarding Test Results

It’s hard to believe, but even after all the effort it takes to get A/B testing right, some people ignore the results.

They’ll get a conversion change that they didn’t expect and go with their opinion or gut feeling anyway.

Giles’s take

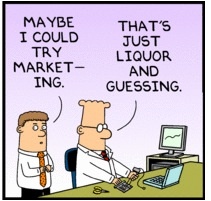

“The whole point of data-driven marketing is to validate assumptions.

Don’t be boneheaded. If a test doesn’t go your way, listen to the data.

Sure, be diligent and cross-check it in any way you can, but please don’t simply disregard test results you don’t like.”

8. Always Test Every Website Change

Testing is a state of mind, and it requires commitment.

Everything you change on your site can impact your business.

It is only the reckless that push changes live untested.

Sure, I advocate big tests and big changes will bring you bigger lifts.

But that is not to say simply changing an image won’t affect your conversion rate and profits too, depending on who you are.

It very well might!

Giles’s take

“A/B testing is like your final hand at the poker table: you’re all in or out.

Go all in on testing, and test everything.

Guessing is irrational behavior. Is that really the best way to sustainably grow your business?”

9. Testing Low Traffic / Low Conversion Sites

Many of you believe the only businesses you can really optimize are high traffic or high conversion volume businesses.

In fact, you don’t need traffic or conversions at scale to be a website optimizer.

The truth is, we can take practices from lean startup methodology and user experience design to test low traffic and low conversion businesses early on and optimize them just the same.

Giles’s take

“We can employ qualitative data collection and analysis methodologies like those found in early stage startups looking for product/market fit to improve businesses with little to no traffic or low conversions.

Created by Steve Blank and popularized by the lean startup movement, customer development is a great source of qualitative data for your business.

Some other examples of qualitative data to analyse for website changes are:

- Customer survey

- Exit intent polls

- Talking with customer services or sales team members

- User Testing

- Usability Evaluations

Instead of running tests to find significance, you focus on big changes that test your business model and product/market fit and watch your bank account.

While for more mature businesses this would be guesswork and not advised, for small early stage companies this can be the best road to product/market fit and success down the line.”

10. Don’t Give Up After One Failed Test

Honestly, most initial testing fails.

Sometimes it can take a half dozen tests to see a conversion and profit lift.

Don’t test one page, fail, then move onto the next area of testing.

Giles’s take

“The most important thing, especially as an agency, is to communicate to the client that tests often fail.

As long as you set expectations correctly, either in-house to management or to your clients, failing tests won’t be a problem.

Stick with testing and fix the biggest money leaks first. This will take more than one A/B test to get right.”

11. Don’t Come Up With Test Ideas, Let The Data Talk

The first step in A/B testing is not coming up with a test idea.

Because every time you personally have a test hypothesis come to mind, you’re actually just remembering something you’ve seen elsewhere.

That’s not to say you or members of your company can’t come up with things worth testing from your experience working in the business.

But make sure to validate these ideas by collecting and analyzing data before testing.

Giles’s take

“Every A/B test should come from data collected and analysed from your business.

Use quantitative data sources to learn where the money is being wasted in your business, and qualitative data sources to learn why.

If an A/B test idea didn’t come from data from your business, throw it away. It’s garbage.”

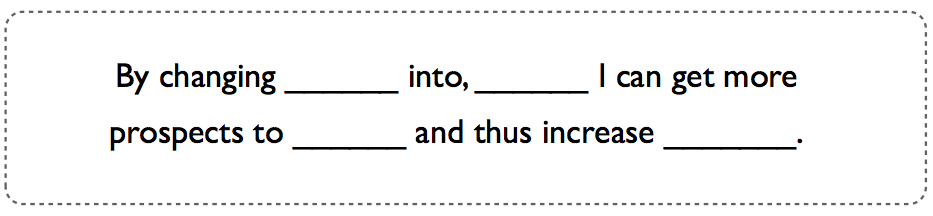

12. Tests Are Not Based On A Hypothesis

As well as ensuring test ideas do come from data you’ve collected and analysed from your business, ensure they also use a hypothesis.

You need a hypothesis to ensure your tests have a clear focus and goal.

Hypotheses also help to improve communication within teams and to iterate on your customer theory.

Ensure you have a strong hypothesis that aims to validate something about your customer or product/market fit.

The hypothesis should come from an insight you’ve gathered through data collection and analysis.

Giles’s take

“When writing your hypothesis you simply need to understand:

- What lesson you learned from data collection and analysis

- What the potential solution is

- Which conversion rate you are trying to improve

- What business objective this goal is linked to

- What dollar amount you are trying to improve within what business cycle

Every hypothesis should aim to give you a better and deeper understanding of your customer.

Use this framework by Michael Aagaard as a guide to writing your hypotheses:

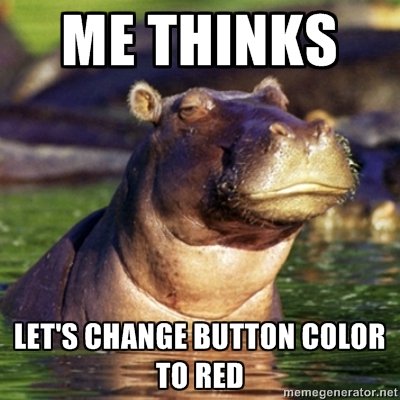

13. Don’t Listen To The Hippo

Just because someone gets paid more than you, doesn’t mean they understand the customer or the business better.

It especially does not mean that they should run A/B tests based on opinion or using a consultancy approach.

Giles’s take

“A/B test ideas should come from data collection and analysis, not from HIPPOs or managers without data-driven marketing experience.”

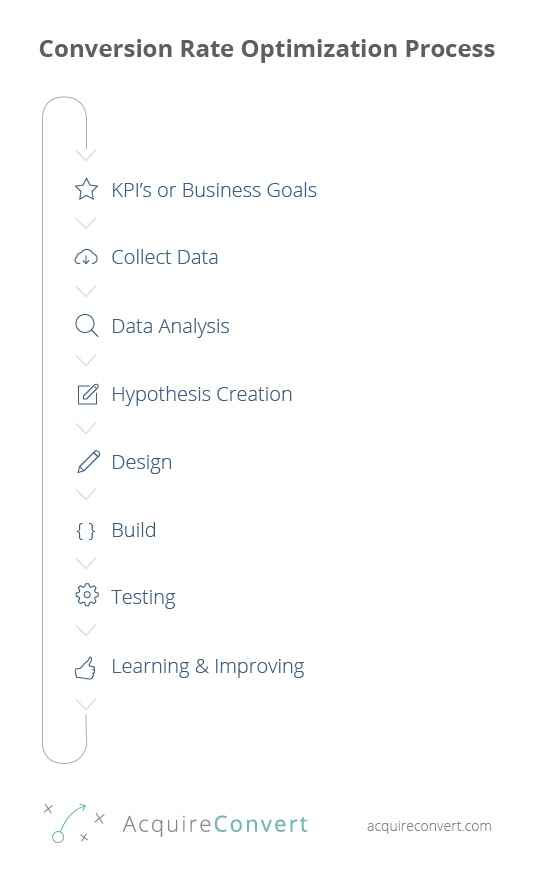

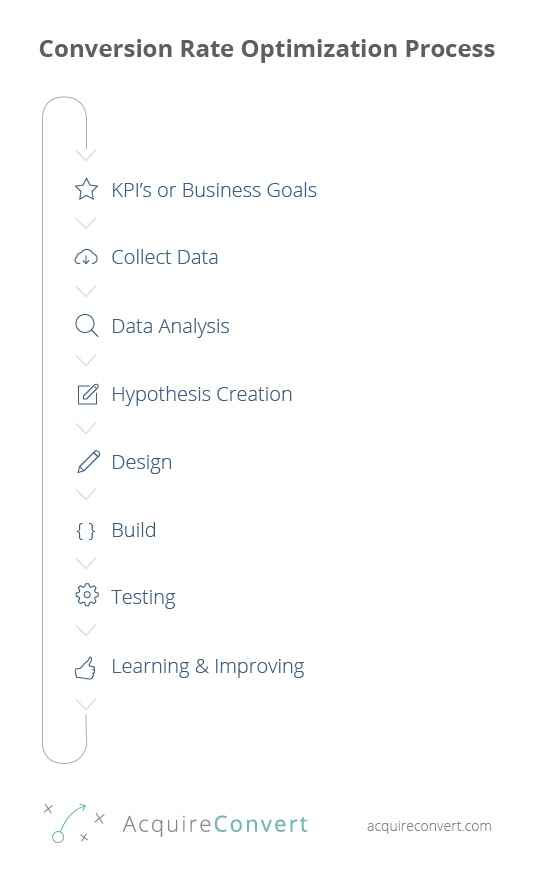

14. Not Having A Process

This is a classic and something I see again and again.

Doing any kind of CRO without a proven process is just plain crazy.

Giles’s take

“You can iterate on a process, it can be improved every time you cycle through it.

A process helps teams communicate and stops marketers just throwing stuff at the wall and seeing what sticks.

A process helps you to stay focused and data-driven and means your A/B tests are backed by business objectives, hypotheses and customer learning at heart.”

15. Thinking A Losing Test Doesn’t Allow For Learning And Growth

Many people focus on creating winning A/B tests.

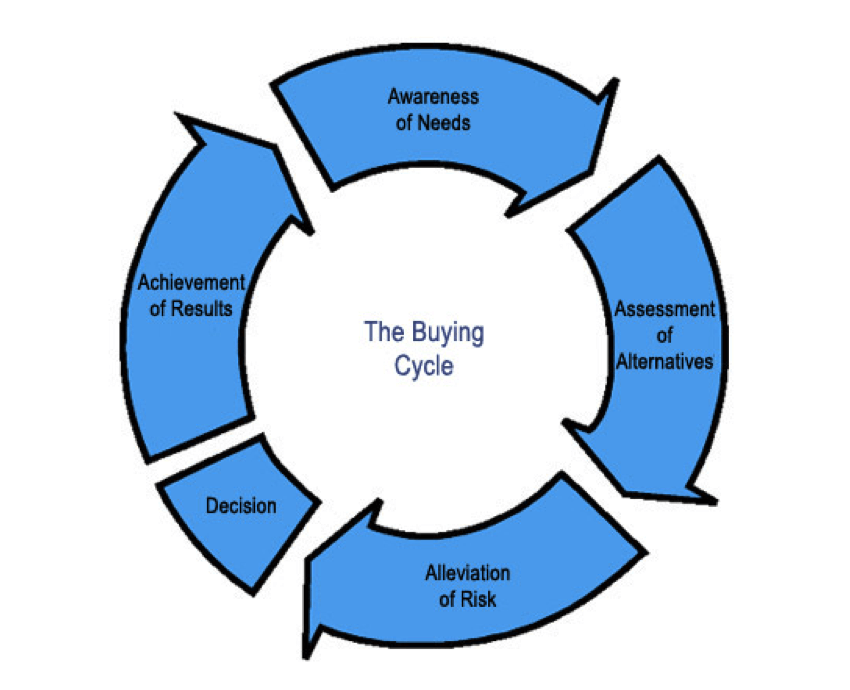

The truth is, A/B testing is not about winning or losing.

It’s actually about customer understanding.

Giles’s take

“If you write a great test hypothesis, and plan your test well, you should still validate or invalidate a customer learning from the test, whether you win or lose.

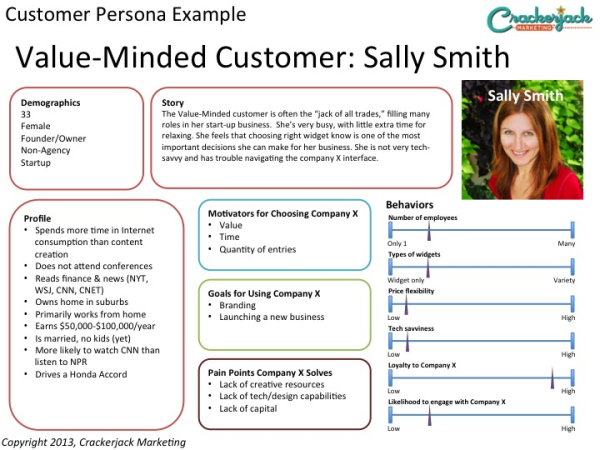

Don’t focus on test wins, focus on customer learning and persona validation.”

16. Always Be Testing

Any day that passes you by without testing is a waste of traffic and customer data.

Testing allows you to learn more about your visitors and customers. You get to know their desires and pain points inside out.

The best way to get conversion rate lifts is through customer understanding.

So use any time you have to learn more and focus in on your exact customer personas and differentiator.

Giles’s take

“Insights from tests can be used throughout your business.

The more you know about your customer the better your user experience becomes.

By user experience I mean any touch point the user has with your brand.

Your PPC campaigns, content marketing, social messaging.

The more laser focussed you are on developing understanding of your customer personas, the better your chances of higher conversions and increased profits.”

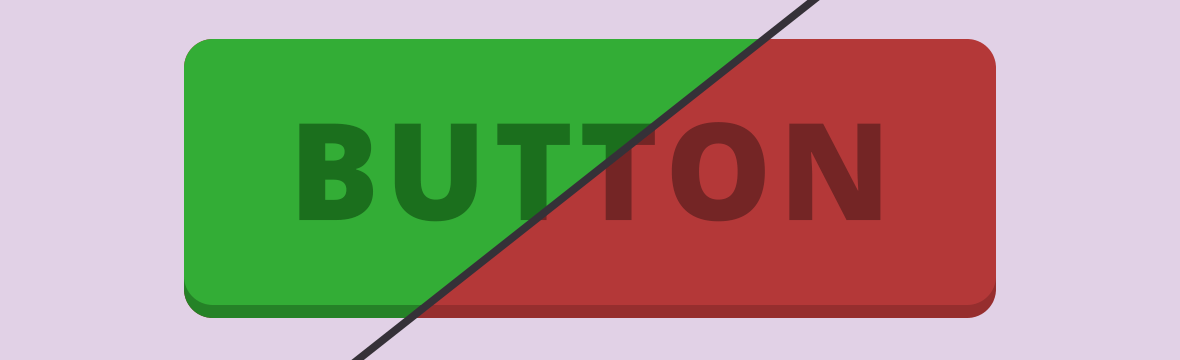

17. Don’t Waste Time & Money On Stupid Tests (Like Button Color)

How many case studies have you seen about button color or copy?

Too many.

How many of these studies tell you the bigger picture? Few to none.

That’s because businesses don’t change overnight due to a button color change.

Giles’s take

“Don’t waste time early in your A/B testing schedule on stupid small tests.

Leave this kind of testing for multivariate tests after A/B testing has proved successful.

If you must take inspiration from inflammatory case studies, dig deeper into what is really happening behind the scenes.

For example, if you cross-reference a lot of button color tests, you’ll find most of the results were actually driven by visual hierarchy or button color contrast.

Learn the principles of conversion design and conversion copywriting to help you see through stupid test case studies.”

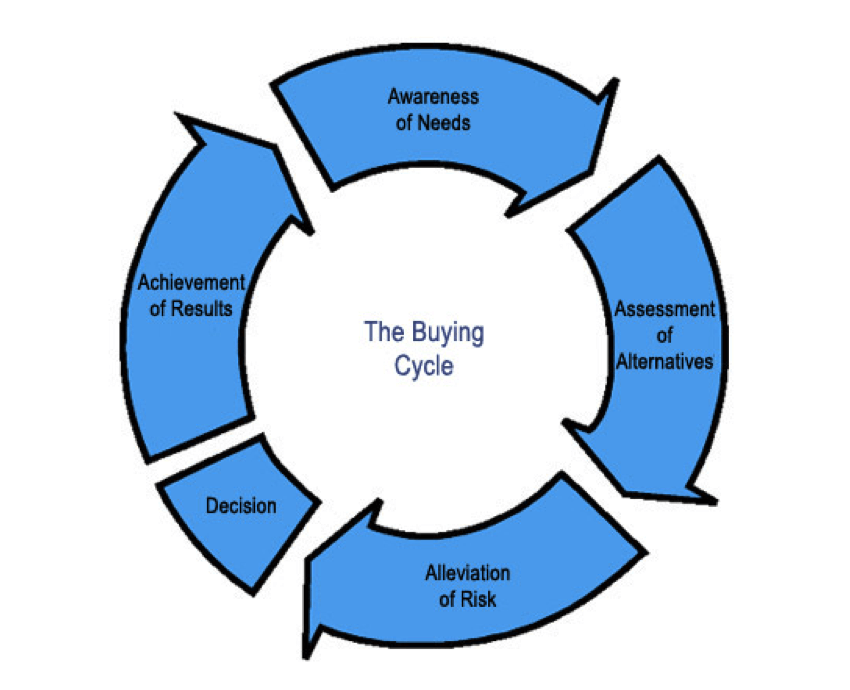

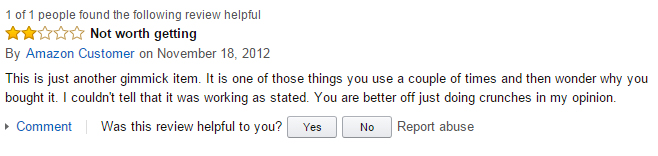

18. Optimize For Lifelong Customers

We all know that when it comes to growing your business, it’s generally easier and cheaper to first look to your existing customers.

Word of mouth, customer referrals and repeat business.

Lifelong, loyal customers that are personally aligned with your brand’s value proposition are exactly what you want more of.

However, when it comes to conversion optimization people tend to forget this adage.

I constantly see people optimizing for the top of their sales funnel.

More easy leads and more cheap sales.

But if you optimize the top of your funnel at the expense of the quality of your customers or, worse, at the expense of your customers’ satisfaction, you are in trouble.

Those customers will end up asking for refunds and complaining, and they won’t turn into repeat business.

In fact, they could end up costing you more than they make you in customer service overheads and negative word of mouth.

If you optimize for the top of your funnel rather than the back end you will make your business feel like a TV infomercial!

And we know from online reviews that these businesses do not continue to sustainably grow!

Giles’s take

“Focus on getting your value proposition right, make sure your unique value is communicated to your target audience. Even at the expense of conversion rate.

People who are aligned with and love your product or service will help grow your business much more than dissatisfied customers and negative word of mouth and reviews.”

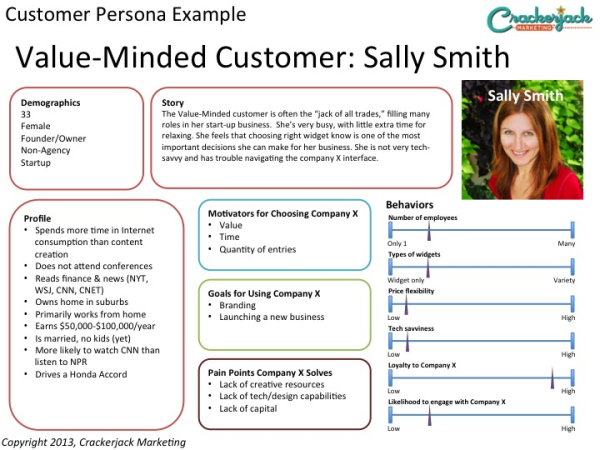

19. Create A Testing Plan That Has Customer Understanding At Its Core

So if you’re not optimizing for the front of the funnel, what are you optimizing for?

Optimize for your value proposition and for your customer theory: what you think you know about your customer and their one true goal or one big pain point.

That is, the one desire they have that your product uniquely enables, or the one big pain point your product solves.

Giles’s take

“Use qualitative data collection and analysis as well as quantitative data to inform your test hypothesis.

Focus on creating tests that aim to not only increase conversion rate but solidify or validate customer assumptions.”

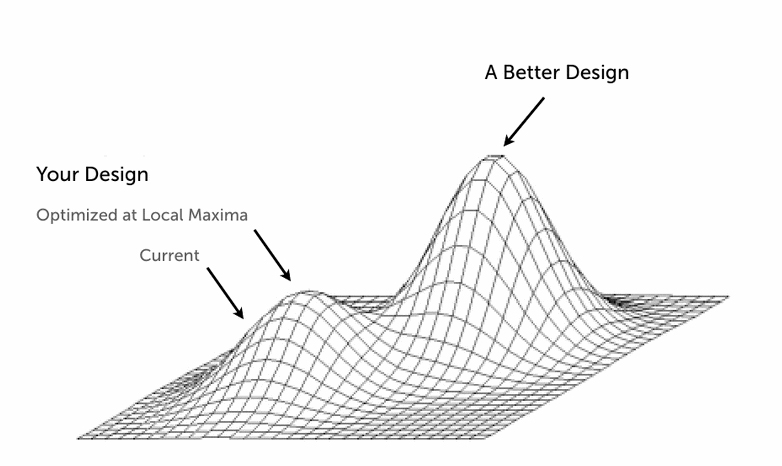

20. Run Macro-Focused Tests

It’s really easy to read a case study online, copy its changes onto your website and try to get a conversion lift.

For example, changing some button copy from ‘your’ to ‘my’.

These kinds of tests rarely result in sustainable improvements or large conversion shifts.

Giles’s take

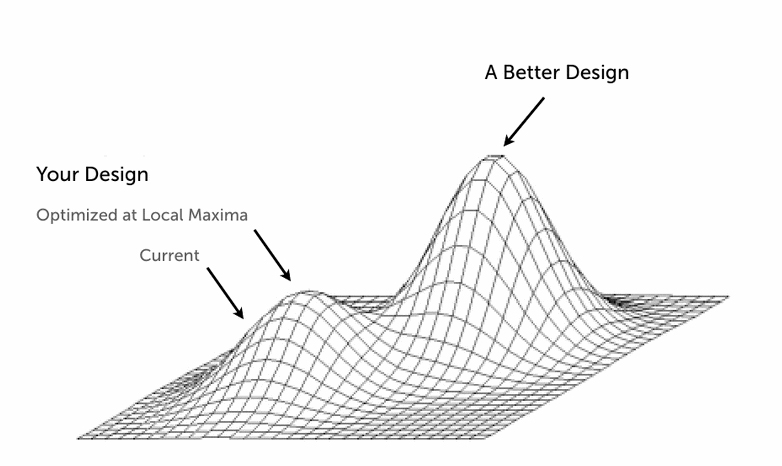

“If you run micro-tests you limit your optimization to a local maximum.

With a macro focus on testing you can reach a new maximum outside your current vicinity.

Big changes and tests lead to bigger conversion lifts or, in the worst case scenario, to bigger customer learnings.

Test something big: your business model or even your product offering.

Smartshoot focused on macro testing and optimized their pricing and products page by offering products that didn’t even exist yet.

By running big tests that focused on customer understanding they learnt what features their customers really cared about and increased conversions by 233%.”

21. Use A/B Testing As Part Of A Complete Conversion Optimization Process

As powerful as A/B testing can be, it’s not a magical solution for your business.

Often the problem lies deeper than simply getting more sales.

Sometimes what you are offering is just not what people want or need: there is no product/market fit.

22. Don’t Copy Your Competitors

We all know how easy it is to read a case study and get carried away. We see big percentage changes in conversion rate and our eyes roll over into dollar signs.

Ching!

However, just because something worked for someone else doesn’t mean it will work for you.

Best practices and conventions can guide us but in CRO there are no hard and fast rules.

Don’t randomly copy tests you’ve found in case studies and stop copying your competitors: they don’t know what they’re doing either.

Giles’s take

“Instead of copying case studies, collect and analyse qualitative and quantitative data to generate test hypotheses.

Run tests based on data from your business and from your customer.”

Best Practices For Planning Your A/B Tests

In the next section you’ll learn some best practices to follow when planning your A/B tests.

23. Prioritize Your A/B Tests

So you’ve collected and analysed qualitative and quantitative data and have come up with test hypotheses that not only aim to solve a problem but deepen your customer understanding.

What could possibly go wrong!?

Well…you could run the wrong test first.

Prioritization of tests is another best practice most skip over.

Make sure to run the tests that take the least amount of time, are the cheapest to execute and have the biggest business impact first!

Giles’s take

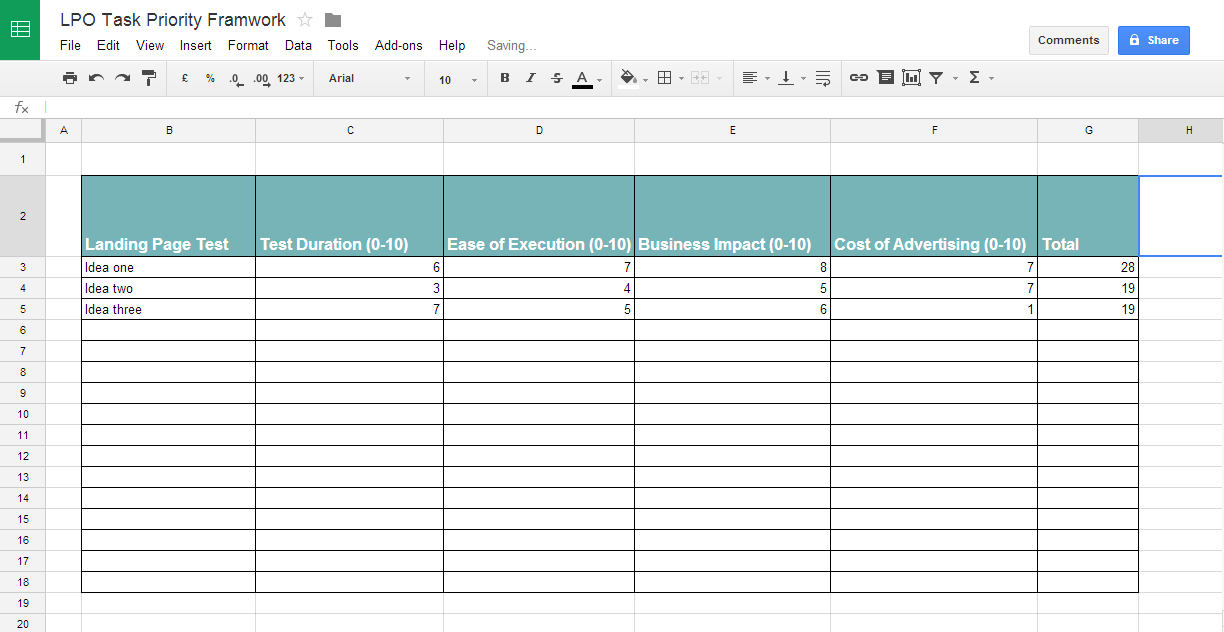

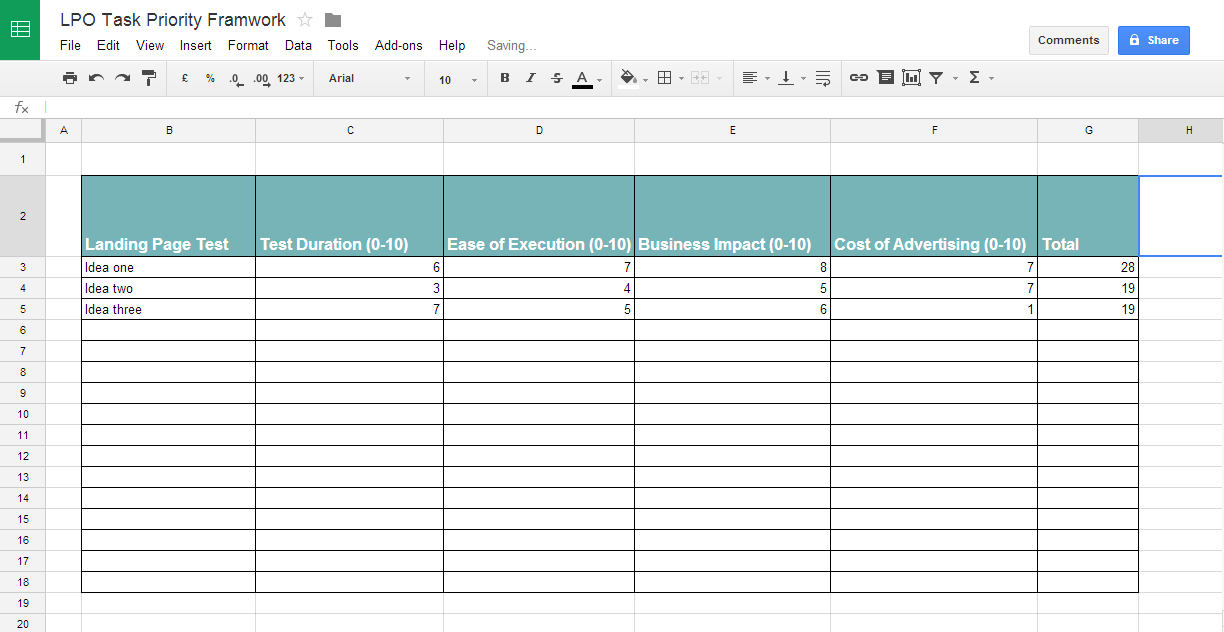

“When prioritizing your A/B tests consider these four factors and score each hypothesis out of ten, then execute the highest scoring test first:

Time – The test duration

How long it takes for the test to reach statistical significance. Shorter tests score higher.

Ease – How easy it is to execute the test

The easier and cheaper a test is to implement the higher it should score.

Business Impact

How much this test will change the business. The business! Not the conversion rate, not the revenue but the profit, the business. Big changes score high.

Cost of Advertising

How much it will cost to drive traffic to this page. If it is all organic traffic then a higher score is more appropriate; if it is expensive, high-competition CPC keyword traffic, score it lower.

Now you have a framework to rank and prioritize your test hypotheses.”
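Here’s a rough sketch of how that scoring could work in practice. It assumes each of the four factors is rated 1 to 10 and that the ratings are simply summed with equal weight; the `Hypothesis` class, the `prioritize` helper and the example backlog are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    name: str
    time: int             # 1-10, shorter tests score higher
    ease: int             # 1-10, easier and cheaper to build scores higher
    business_impact: int  # 1-10, bigger effect on profit scores higher
    ad_cost: int          # 1-10, cheaper traffic (e.g. organic) scores higher

    def score(self) -> int:
        # Assumption: the four factors are weighted equally and summed.
        return self.time + self.ease + self.business_impact + self.ad_cost


def prioritize(hypotheses):
    """Return hypotheses ordered from highest to lowest total score."""
    return sorted(hypotheses, key=lambda h: h.score(), reverse=True)


# Made-up backlog, for illustration only.
backlog = [
    Hypothesis("New value proposition on homepage", 7, 8, 9, 8),
    Hypothesis("Redesigned checkout flow", 4, 3, 9, 6),
    Hypothesis("Button copy tweak", 8, 10, 2, 8),
]

for h in prioritize(backlog):
    print(f"{h.score():>2}  {h.name}")
```

If business impact matters more to you than the other factors, you could weight it more heavily inside `score`; equal weighting is just the simplest starting point.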

24. Testing Templates Not Individual Pages

One common problem with testing, especially for ecommerce websites, is that they only have a small number of page templates.

But they have potentially hundreds of pages based on those templates.

Product pages, category pages etc.

So to leverage the traffic and conversion volume of all those visitors and sales, test on a template level.

Giles’s take

“Of course this comes down to what you’re testing and why, but leveraging all product page traffic to test a new variation is a smart move.

The test will be quicker and cheaper to run and the results will have a larger sample size and therefore more statistical power.”

25. Talk With The Developers While Planning Tests

It’s all well and good toeing the line of best practice and getting all your technical implementation correct.

But if you don’t talk with the website development team and discuss how your plan fits into their normal deployment schedule, you could be in for some trouble.

Overlapping your testing schedule with changes to the website would be a recipe for disaster.

Giles’s take

“Make sure you align your conversion optimization efforts with the design, user experience and development processes already in place.

As CRO becomes more prevalent throughout online business we need to learn how to communicate and collaborate between UX, CRO, analytics, design and product more closely.

That means combining company-wide build, measure and learn processes and iteration cycles.”

26. Not Engaging The Whole Team For Test Ideas

Even though your test ideas and hypotheses should come from data collection and analysis, there is nothing stopping anyone in the business having input into testing schedules.

Giles’s take

“The truth is your staff are in the trenches, they speak to the customer every day (you should too).

Which means, especially if they work in customer services, they have a good idea of who your business caters for.

Get input and be open to test ideas from all team members, but just make sure they can back it up with qualitative data from a number of customers.

You want to see patterns: not one-off extreme cases of anger or delight, but repeating customer feedback that points to test ideas from their department.”

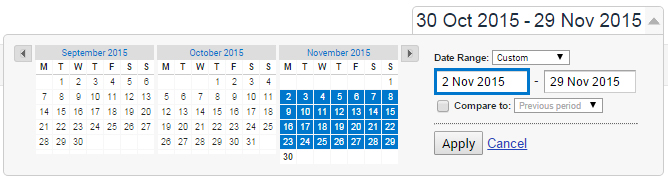

27. Run Tests For Full Weeks & Months

Let’s say you’ve cracked the code on organic traffic and you get a bunch of daily uniques and sales. We’re talking 750+ sales a day.

Perfect right?!

You can A/B test to your heart’s content and run whole tests in a single day!

Wrong.

You need to run your tests for full weeks (Monday to Sunday), if not full months.

Better yet run them for a full business cycle and then look at the bank statements.

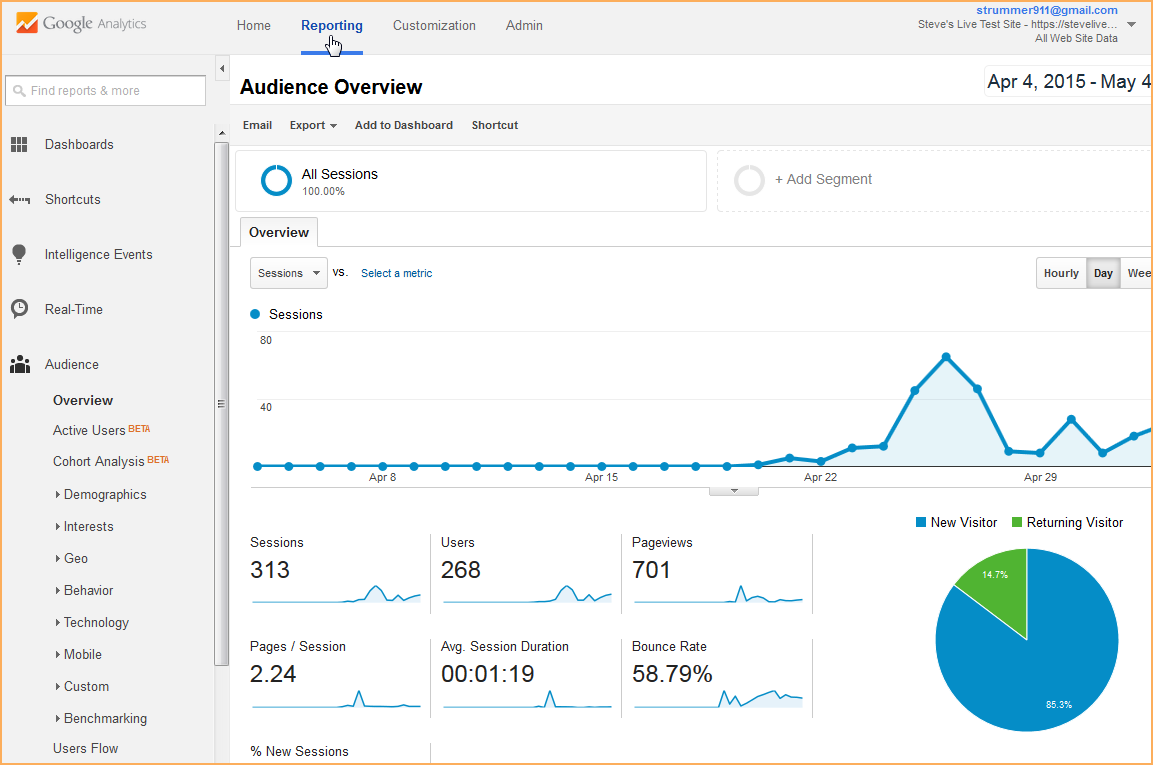

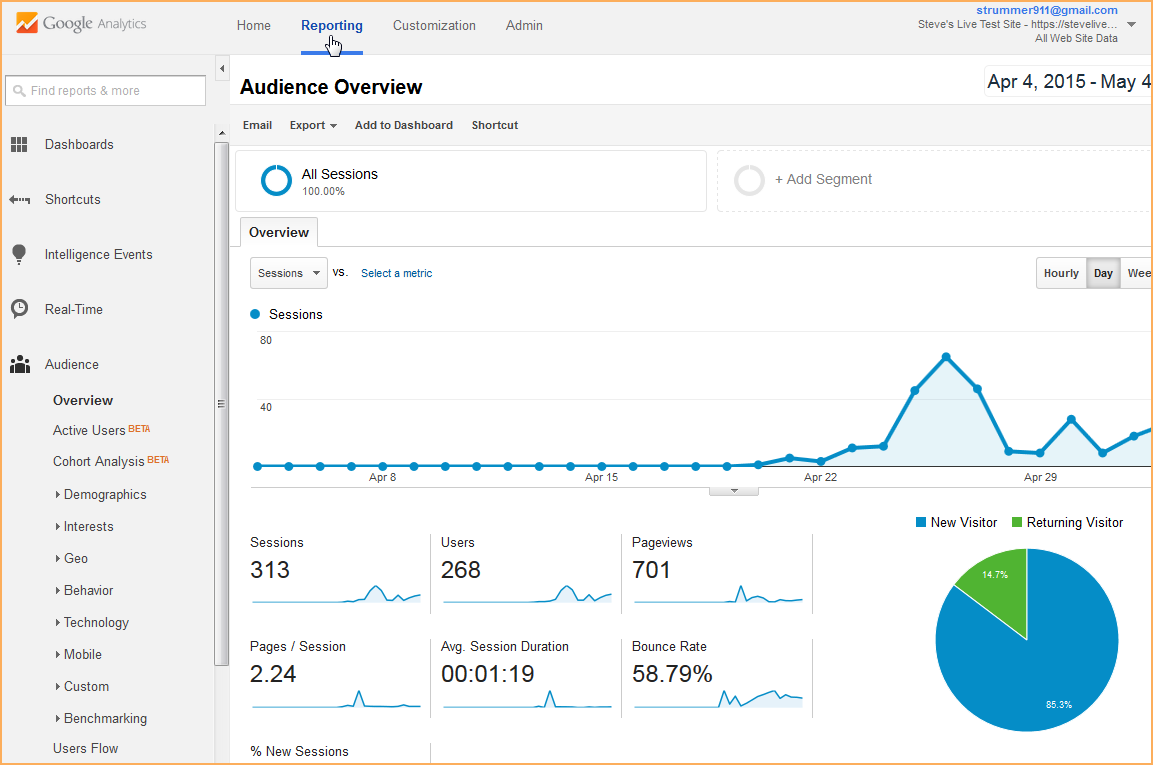

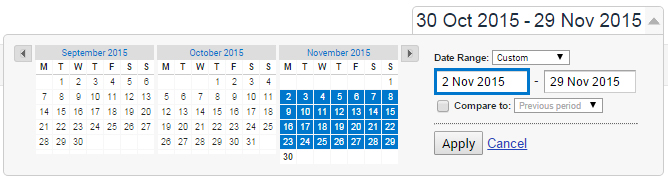

Just look at your conversions by days of the week report in Google Analytics and you’ll understand.

Joe from Ispionage.com suggests:

“One of the best practices I’ve learned is that you need to let tests run for at least a week and possibly a month because I’ve seen way too many tests where one variation jumps out early and reaches statistical significance only to come back down to earth and score the same conversion rate as the control a week later. With that said, if you don’t have enough traffic you can sometimes pick winners sooner, but when possible, it’s better to let the test run longer so you can make sure the results hold true over time and aren’t merely a fluke.”

Giles’s take

“Conversion rate can fluctuate by day of the week, when it’s the holidays or even because of weather.

You need a representative data sample for your data to be valid, so don’t run tests in a single day.”
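To make the “full weeks” rule concrete, here’s a small sketch that turns a required sample size into a test duration rounded up to whole weeks. The function name and the visitor numbers are hypothetical, and it assumes your daily traffic is roughly steady.

```python
import math


def duration_in_full_weeks(required_visitors, daily_visitors):
    """Days to run a test, rounded up to a whole number of weeks."""
    if daily_visitors <= 0:
        raise ValueError("daily_visitors must be positive")
    raw_days = math.ceil(required_visitors / daily_visitors)
    full_weeks = max(1, math.ceil(raw_days / 7))
    return full_weeks * 7


# Even if traffic alone would fill the sample in two days, run a full week.
print(duration_in_full_weeks(required_visitors=20_000, daily_visitors=15_000))

# Twelve days of traffic gets rounded up to two full weeks.
print(duration_in_full_weeks(required_visitors=100_000, daily_visitors=9_000))
```

The result is a minimum, not a target: if your purchase cycle is longer than the number you get, run the test for the longer period.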

28. Consider Purchase Cycle In Your Sample Size

Understanding how long your typical purchase cycle is can make or break your A/B testing efforts.

If you run a test for only two weeks (Monday to Sunday of course) when your cycle is more like a month, then you’ll end up cutting off a lot of visitors from the experiment.

Giles’s take

“The problem with stopping a test before the end of your purchase cycle is you may end up with skewed results.

You end up capturing data only for what I call ‘Early Converters’ and lose data for visitors who would convert later in the cycle.

You’re better off leaving an experiment running in the background once you’ve closed it to new visitors.

With this approach people who are still in their purchase cycle will continue to see the test and become ‘Late Converters’.

You increase your test sample size, which is hardly a bad thing, and push ‘Late Converters’ through the test without showing it to new unique visitors.”

29. Consider Visitor Device Types When A/B Testing

Imagine you’re an ecommerce entrepreneur and you optimize your online store for mobile.

You run an A/B test, a site wide comparison of the original design vs the new responsive design.

Oh dear, conversion rate drops by 5%.

Do you stick with the control?

When you look again closely, you realize the visitor’s device type was not considered when preparing the test.

So it is possible that the majority of mobile visitors saw the old unresponsive site.

Giles’s take

“A better approach would have been to evenly split the mobile and desktop traffic between the two variations.

You could then understand how the responsive design individually affects desktop and mobile visitors.

Make sure to understand and consider how device type affects your A/B testing results.”
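One way to see how device type affects a result is to break the conversion rate down by device and variation instead of looking at a single blended number. The sketch below assumes you can export one record per visitor; the tuple layout, the `conversion_by_segment` helper and the tiny visit log are invented for illustration.

```python
from collections import defaultdict


def conversion_by_segment(visits):
    """Conversion rate for each (device, variation) pair.

    `visits` is an iterable of (device, variation, converted) tuples.
    """
    totals = defaultdict(lambda: [0, 0])  # key -> [visitors, conversions]
    for device, variation, converted in visits:
        totals[(device, variation)][0] += 1
        totals[(device, variation)][1] += int(converted)
    return {key: conv / n for key, (n, conv) in totals.items()}


# Hypothetical visit log, far too small for a real test.
visits = [
    ("mobile", "control", False), ("mobile", "control", False),
    ("mobile", "responsive", True), ("mobile", "responsive", False),
    ("desktop", "control", True), ("desktop", "control", False),
    ("desktop", "responsive", False), ("desktop", "responsive", True),
]

for (device, variation), rate in sorted(conversion_by_segment(visits).items()):
    print(f"{device:8} {variation:11} {rate:.0%}")
```

Each segment needs enough visitors on its own to be trusted, so plan the sample size per device, not just for the test as a whole.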

30. Prioritize Tests With The PIE Framework

Use your business resources to plan and measure marketing initiatives well.

Lorenzo Grandi, marketing manager at pr.co, suggests:

“Prioritise your tests with the PIE framework.

The time you spend to prepare your tests is well spent. Since you’re going to run a lot of tests, and are aiming at making a difference, you’ll need to decide how to prioritise your tests.

A good way to do it is through the PIE framework: put all your test ideas in a spreadsheet, and rate (1-10) each of them for these parameters: Potential, Importance and Ease. Calculate the average, which is the ultimate value of your test idea. You can order your spreadsheet by this value to have your priority list ready.

In this way, you’ll be able to consider an objective ranking to decide which tests to start next.”

Check out this case study recommended by Lorenzo for more information.
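If you’d rather script it than use a spreadsheet, the PIE calculation Lorenzo describes is just an average of three ratings followed by a sort. A minimal sketch, with made-up test ideas and a hypothetical `pie_score` helper:

```python
def pie_score(potential, importance, ease):
    """Average of the three PIE ratings, each on a 1-10 scale."""
    for rating in (potential, importance, ease):
        if not 1 <= rating <= 10:
            raise ValueError("each rating must be between 1 and 10")
    return (potential + importance + ease) / 3


# Hypothetical test ideas with (potential, importance, ease) ratings.
ideas = {
    "Rewrite product page headline": (8, 9, 7),
    "Add trust badges to checkout": (6, 8, 9),
    "Redesign category navigation": (9, 6, 3),
}

# Highest average first gives you the priority list.
ranked = sorted(ideas, key=lambda name: pie_score(*ideas[name]), reverse=True)
for name in ranked:
    print(f"{pie_score(*ideas[name]):.1f}  {name}")
```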

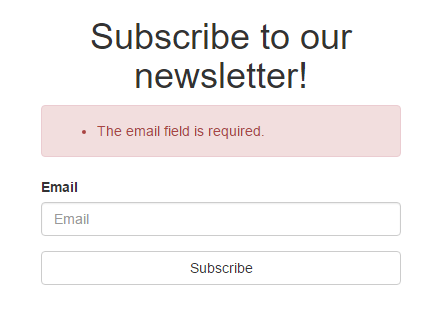

31. Set Goals For Your Tests

Sean Si, founder of SEO Hacker, suggests:

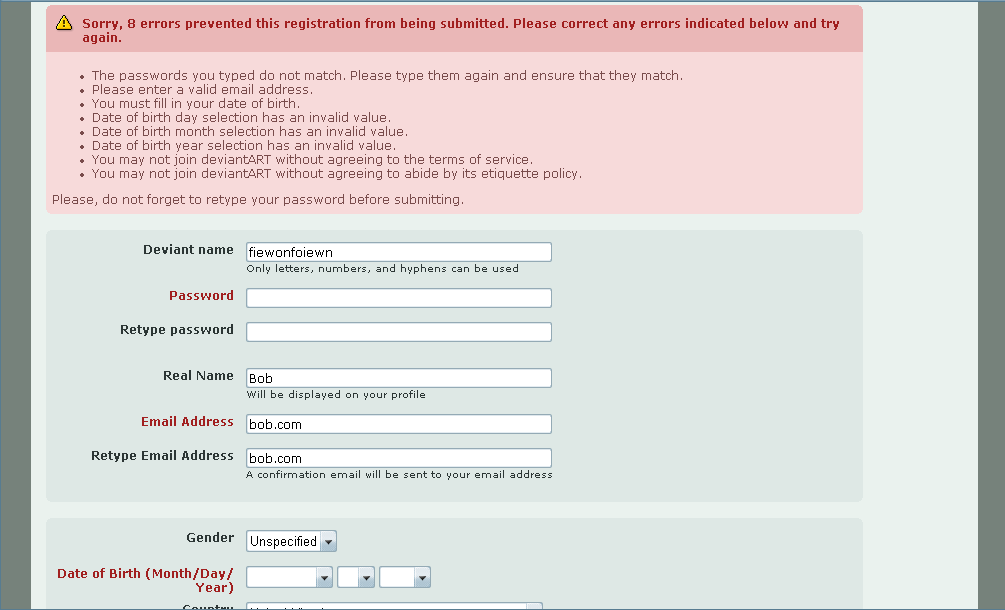

“One of the biggest A/B testing mistakes a person can make is to start testing without setting the goal properly.

For example, when A/B testing our homepage at Qeryz, our goal was to increase freemium signups. The problem is, we weren’t able to fully qualify the signups because the Original version of the homepage didn’t have a signup form in it while the Variation version did. The goal count was set for people who went to our signup page and then entered onboarding. This was a major mistake because people who were seeing the variation version did not need to go to our signup page because there was a signup form right there in the homepage!

Because of the mistake in goal setting, the data in that A/B test was severely skewed. We had to redo it and it took another 2 weeks for data gathering – which sucked.”

32. Understand Your Customers’ Weekly & Hourly Behaviors

Even though we test for full weeks, it’s still important to understand how buying habits and customer behaviors change hour to hour and day to day.

Jerry from Webhostingsecretrevealed.com suggests:

“Do time/day-based split-tests – Visitors shift their focus throughout the day and week (i.e. weekday vs weekend; morning vs night) and hence react to web content (sales copy, blog content, etc.) very differently. So to give the biggest bang for my buck, I ought to determine the best day/time and max out my advertising effort on those good days.”

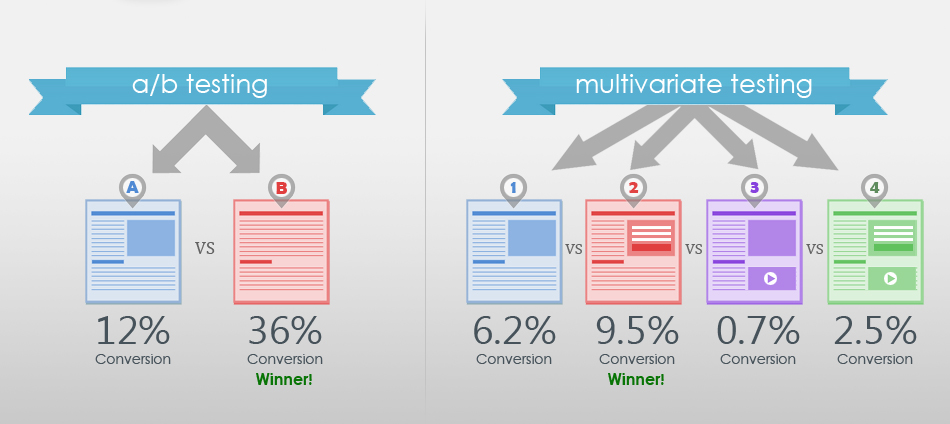

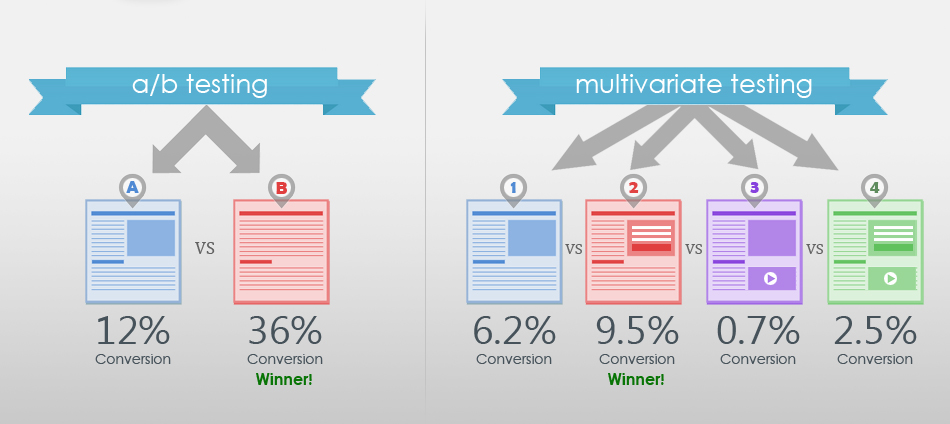

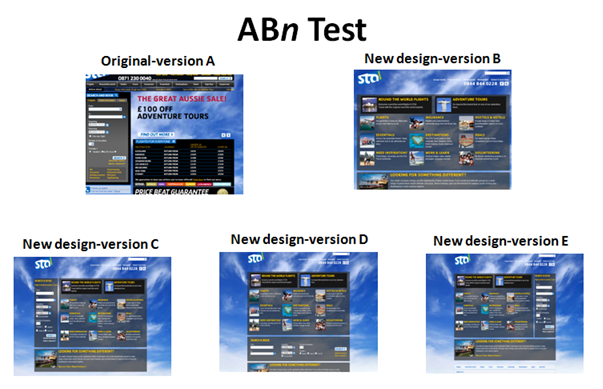

33. Running A/B Tests After Large Changes When You Should Run Multivariate Tests

Even though I am a big advocate of running big tests and going after big changes in the business, there is a time and place where multivariate testing just makes more sense.

This is normally after you have run an A/B test and seen a big lift, and now want to optimize more nuanced parts of the page.

Giles’s take

“It can be valuable to run multivariate tests after getting a big win with A/B testing.

However, the truth is, multivariate testing requires a lot more traffic to get statistically significant results.

For every test variation you will require at least another 250-350 conversions.

Multivariate tests do something A/B tests cannot: they help you to learn how users behave and interact with single elements and variables of those elements in terms of their design.

You can determine the impact individual elements on a page have in terms of conversion rate.

As a rule of thumb you should be running 9 A/B tests for every one multivariate test.”
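To get a feel for how quickly the traffic requirement grows, here’s a back-of-the-envelope sketch. It assumes a full-factorial multivariate test (every combination of element versions is its own variation) and applies the 250-350 conversions rule of thumb to each combination; the function name and the example elements are hypothetical.

```python
import math


def multivariate_conversions_needed(options_per_element, per_variation=(250, 350)):
    """Estimate the conversions a full-factorial multivariate test needs.

    `options_per_element` lists how many versions of each page element
    are tested, e.g. [2, 3] for 2 headlines and 3 hero images.
    """
    combinations = math.prod(options_per_element)
    low, high = per_variation
    return combinations, combinations * low, combinations * high


# 2 headlines x 3 hero images = 6 combinations to fill with conversions.
combos, low, high = multivariate_conversions_needed([2, 3])
print(f"{combos} combinations need roughly {low}-{high} conversions")
```

Add one more element with three versions and the requirement triples, which is why multivariate tests are best saved for pages that already convert at volume.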

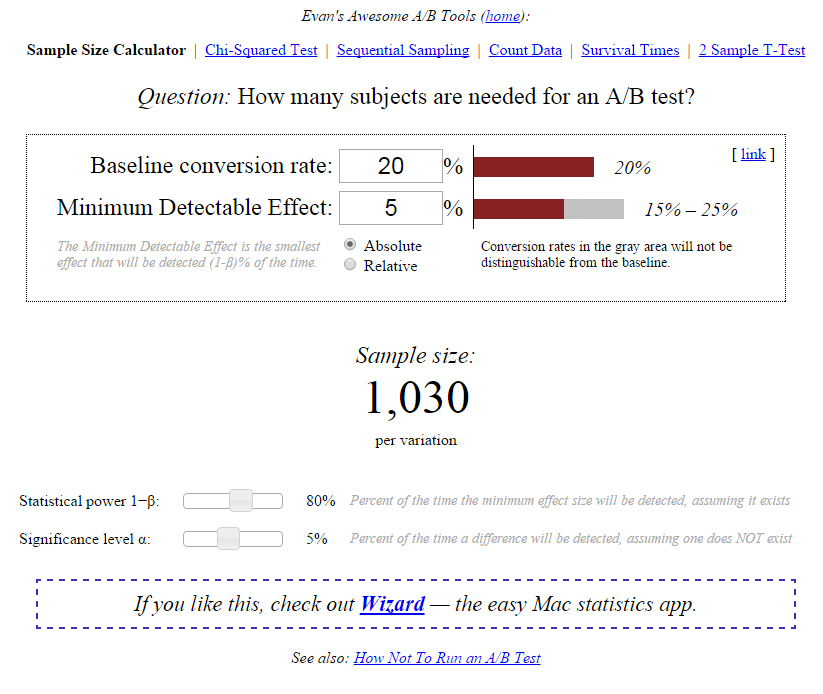

34. Not Calculating The Sample Size Before The Test

The most important thing to remember from these best practices is that significance is not a stopping factor, sample size is.

Statistical significance does not tell us the probability that B is better than A. It also doesn’t tell us the probability that we will make a mistake in selecting B over A.

These are both common misconceptions.

Giles’s take

“Testing without measuring statistical significance is just nuts.

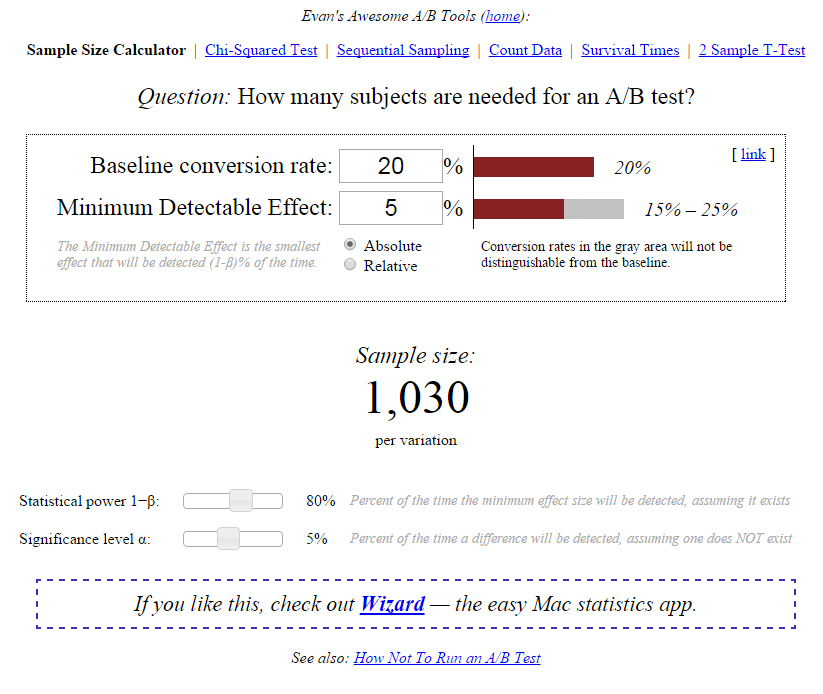

Always calculate the required sample size before you run your test.

Good test planning as highlighted in this section is key.

Use a sample size calculator. I recommend this one from Evan Miller.”
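If you want to see roughly what such a calculator does under the hood, here’s a sketch using the standard normal-approximation formula for comparing two conversion rates. It is not the exact method of any particular calculator, so expect slightly different numbers; the defaults of 5% significance and 80% power, and the function and parameter names, are assumptions made for this example.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline_rate, minimum_lift, alpha=0.05, power=0.8):
    """Visitors needed in EACH variation for a two-sided test.

    baseline_rate: current conversion rate, e.g. 0.03 for 3%
    minimum_lift:  smallest relative lift worth detecting, e.g. 0.2 for +20%
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + minimum_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must be between 0 and 1 and must differ")

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # power threshold
    pooled = (p1 + p2) / 2

    numerator = (
        z_alpha * math.sqrt(2 * pooled * (1 - pooled))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Hypothetical store: 3% baseline conversion, looking for a 20% relative lift.
print(sample_size_per_variation(baseline_rate=0.03, minimum_lift=0.2))
```

Decide on this number before the test starts and stop when you reach it, rather than stopping the moment your tool shows significance.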

35. Running Multiple Tests At The Same Time With Overlapping Traffic

It may seem like you are saving time by running multiple tests at the same time.

But this can often lead to skewed data.

If you choose to run A/B tests with overlapping traffic, you must ensure that traffic is evenly distributed.

Say you test a single product page, A vs B, and your checkout page too, C vs D.

Be careful to ensure that traffic from B is split 50/50 between C and D (rather than 30/70, for example).
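A quick way to check this is to count where each upstream variation’s visitors ended up in the downstream test. The sketch below assumes you can export one (upstream, downstream) pair per visitor; the `downstream_split` helper and the sample data are hypothetical.

```python
from collections import Counter


def downstream_split(assignments, upstream_variant):
    """Share of one upstream variant's visitors in each downstream variant.

    `assignments` is an iterable of (upstream, downstream) pairs,
    e.g. ("B", "C") for a visitor who saw product page B and checkout C.
    """
    counts = Counter(down for up, down in assignments if up == upstream_variant)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {variant: n / total for variant, n in counts.items()}


# Made-up export where B's visitors are skewed 30/70 instead of 50/50.
assignments = (
    [("A", "C")] * 5 + [("A", "D")] * 5
    + [("B", "C")] * 3 + [("B", "D")] * 7
)

print(downstream_split(assignments, "A"))
print(downstream_split(assignments, "B"))
```

With real traffic the split will never be exactly even, so look for large, persistent skews rather than small random differences.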

Giles’s take

“If you want to test new variations of several pages in the same flow at once you are better off using multi-page testing.

Just ensure you get attribution right.”

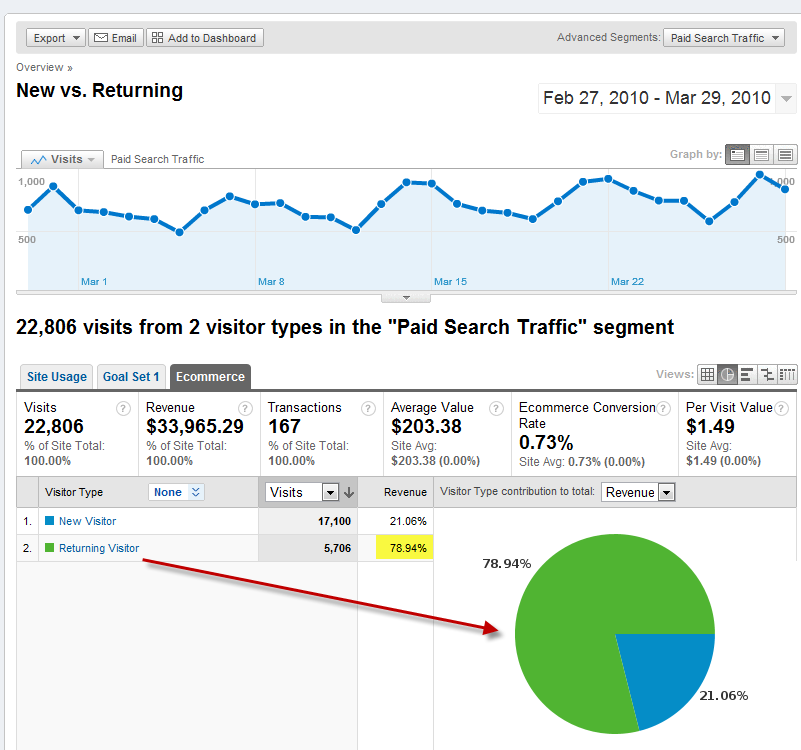

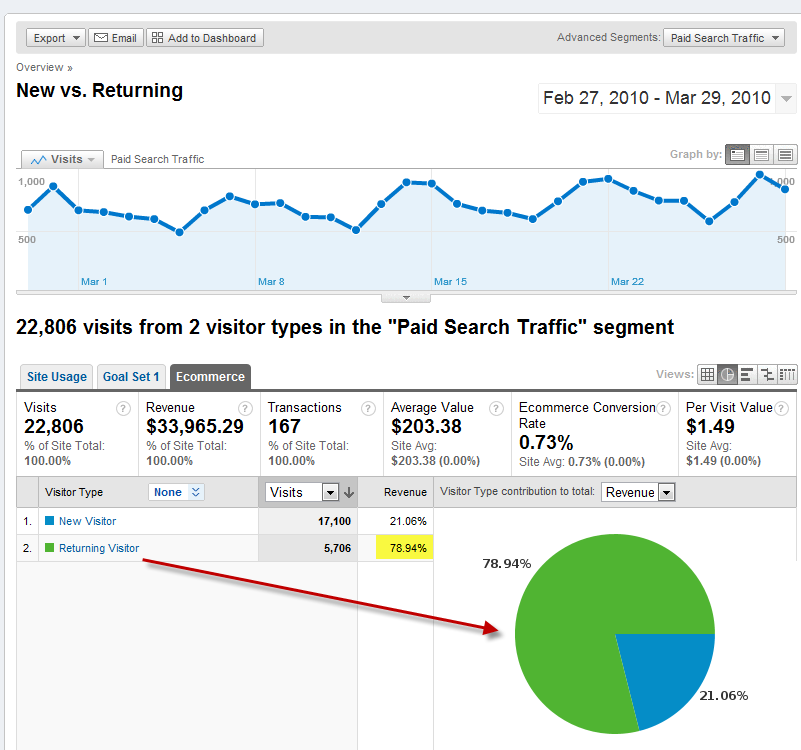

36. Don’t Surprise Your Regulars

When you run an A/B test, take time to plan how to segment your returning and new visitors.

Returning visitors know your website well. They have learned the experience of the site and have expectations.

If most of your revenue comes from returning visitors, think about testing your new variation on only new visitors.

Giles’s take

“Make sure to segment your tests and understand what the purpose of the test is.

If you are trying to improve the conversion rate of new visitors, improving your value proposition for example, then consider exposing only new visitors to the test.

You could implement a segmented test like this using Convert.com.”

37. Testing Too Many Variables

Don’t A/B test multiple variations at once unless they are significantly different. Leave this type of design refinement to multivariate testing, which should come after a strong lift achieved through a large A/B test with a radical redesign approach.

Giles’s take

“Testing too many variations can often lead to wasted time.

Each variation will generally require a fairly large number of test subjects and will lengthen the time it takes to reach statistical significance when testing.

Also, multiple variations usually point towards random testing, with either a bad hypothesis or, even worse, no hypothesis.

Make sure your tests have a strong hypothesis that attempts to validate something significant about your customer, not just your webpage.

A/B testing is about improving customer understanding not micro conversion rates of single steps in a funnel.“

38. Avoid Sequential Testing

What if you just make a change to your website and then simply wait to see if the goal conversion rate increases or decreases?

Testing the conversion rate of Design A for one week, then swapping it and testing the conversion rate of Design B for one week as a comparison is not real testing.

This is what’s meant here by sequential testing: running one version after the other instead of both at the same time.

Sequential testing isn’t valid because the two versions aren’t shown to the same visitors over the same period.

The data sets are different and therefore not comparable.

Giles’s take

“Your conversion rate can change seasonally, by the day of the week or due to external factors such as weather or environment.

Therefore the only way to compare or test in a valid way is at the same time with the same traffic.

Never use sequential testing.

Only test using the same data set, the same traffic at the same time.

The only caveat to this rule is if you do not have enough traffic to test at all.

Then again, don’t run a sequential A/B test, simply make large changes and watch your monthly revenue and sales.

Your bank account can’t lie like misleading A/B test data can.“

Best Practices For Setting Up Your A/B Tests

Now we’ve learned a number of key points to keep in mind when planning your A/B tests, let’s dig deeper into best practices for setting up your A/B tests technically.

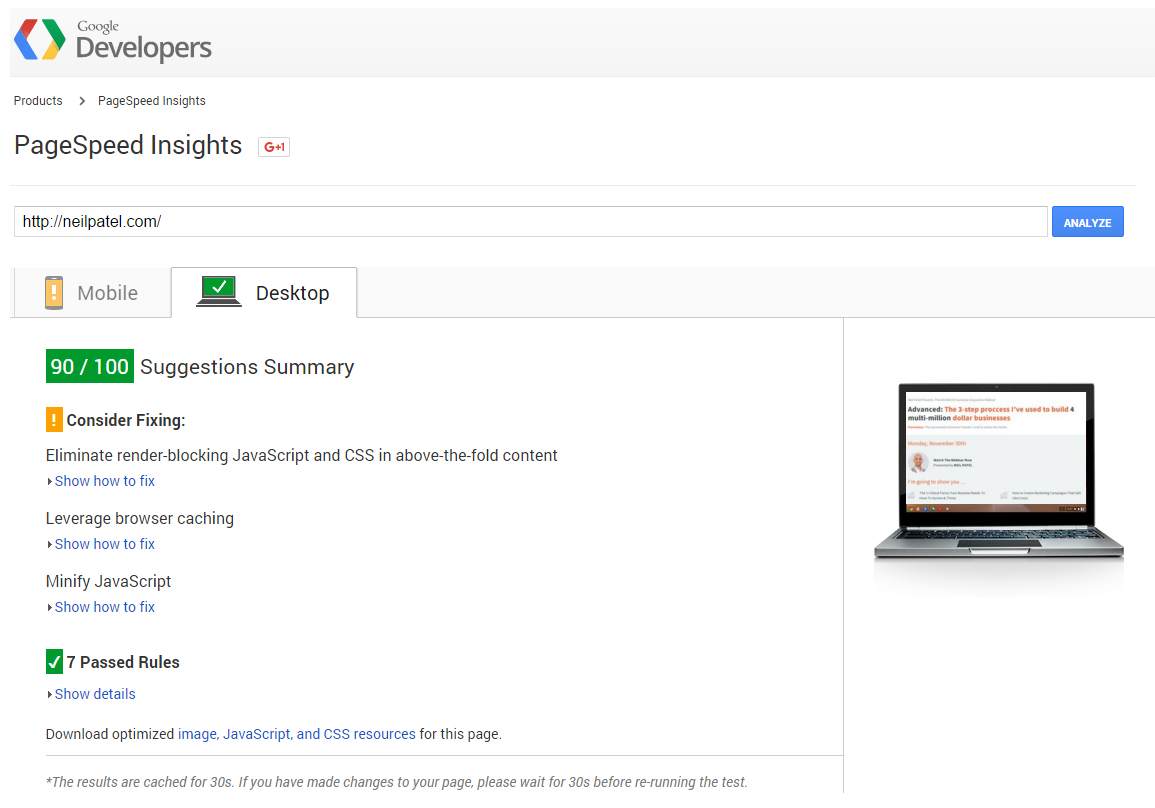

39. Run A Website Load Time Test Before & After You Install Your Javascript Snippet

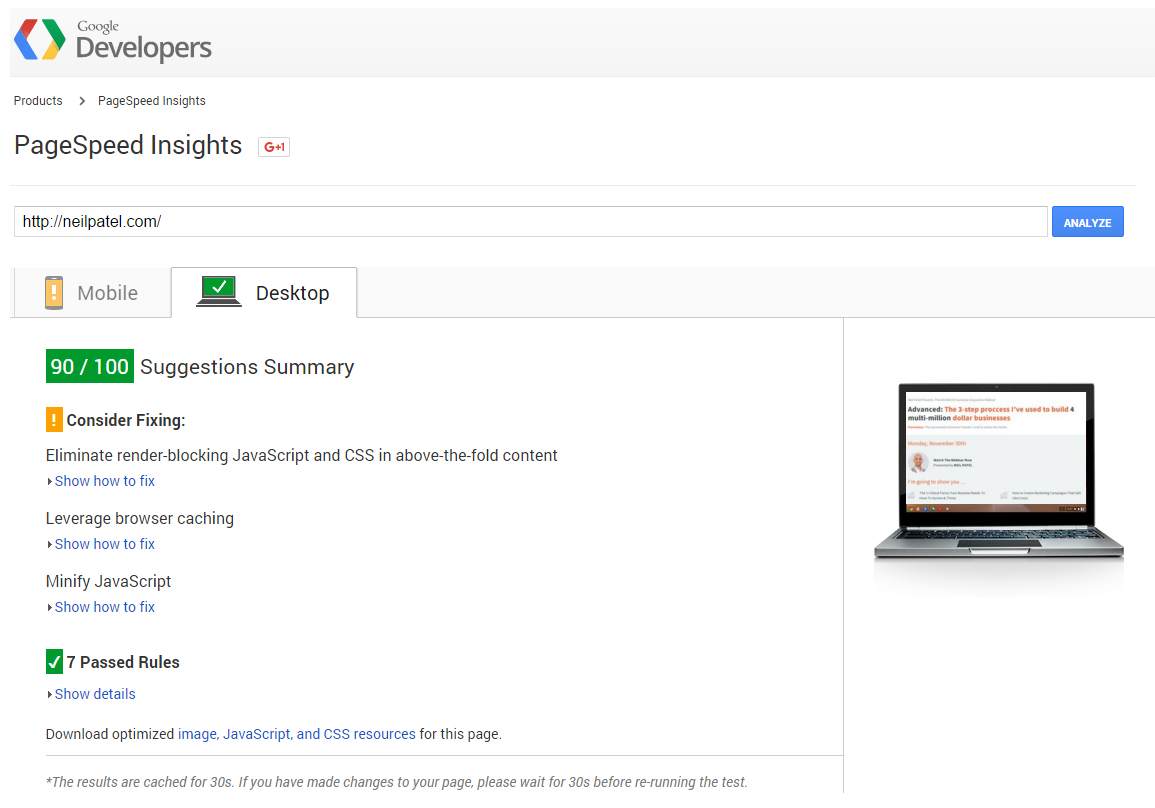

You all know that your website performance can affect your conversion rate.

If your page loads too slowly visitors will bounce and leave your site without converting.

This can be expensive, especially if you’re driving paid traffic to landing pages.

Make sure to run a page load speed test before and after you install your A/B testing software on your website, as every JavaScript snippet added to your website will slow down the load time.

Giles’s take

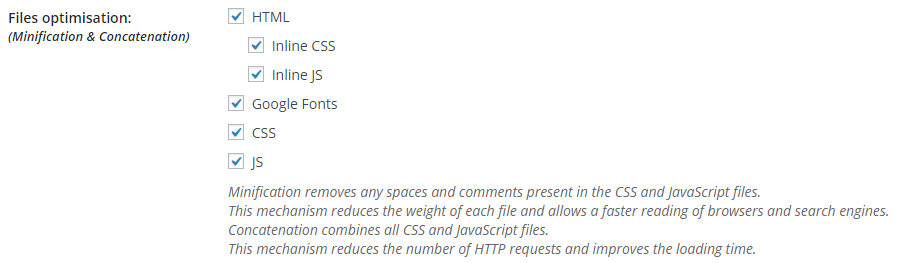

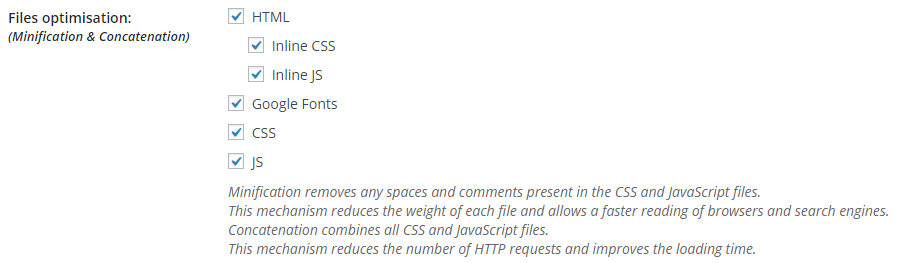

“Use Google Tag Manager to organise and optimize all your website’s JavaScript snippets in one place (great for non coders too).

You can also use caching tools or plugins, for WordPress I recommend WP Rocket, which allows you to minify and concatenate (reduce the weight and load time of) your css and javascript files.

If you’re using Optimizely, toggle jQuery in your project settings – Optimizely uses jQuery heavily to minimize the size of its code.

You’re almost definitely already running jQuery on your website and can disable it in Optimizely to speed up your load time.“

40. Watch For The Flicker Effect

The flicker effect is one downside to using client-side A/B testing tools, such as VWO or Optimizely.

The flicker effect is when for a brief moment you see the original version of the page before the test variation loads.

Also known as FOOC, or flash of original content, the flicker can last for up to 100 milliseconds. That’s long enough to notice, as humans can identify images in as little as 13 milliseconds.

Giles’s take

“Tools like VWO and Optimizely are great as they remove the need for developers and allow non technical people to implement tests quickly using WYSIWYG editors.

But they do have some down sides.

One of the major drawbacks of using client-side tools is that they affect your page load times and website speed, and can cause the flicker effect.

The best way to combat this is to speed up your website and make sure you embed your javascript snippet which loads the testing software in the right location on your page.

For Optimizely this is as high up as possible in the header.“

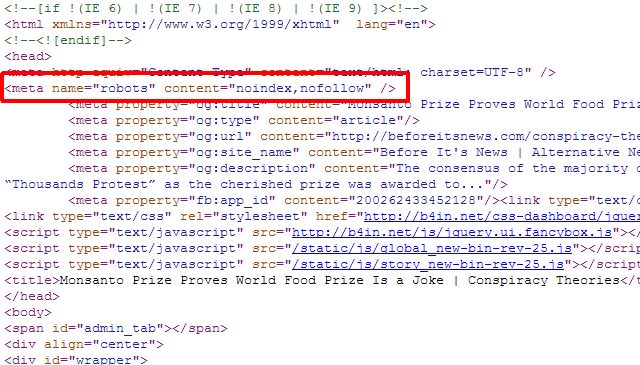

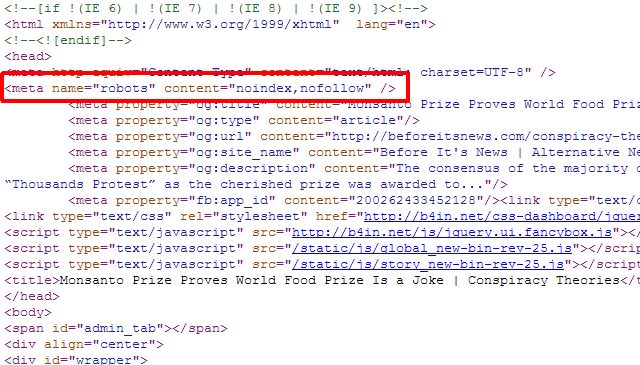

41. Make Sure You ‘No Index’ Your Variation Pages

Say you are running an A/B test, ‘Control’ vs ‘Variation 1’.

You do not want ‘Variation 1’ to be indexed by Google.

Because to date, the ‘Control’ is the best version of that page you’ve created.

And if ‘Variation 1’ is indexed, people could land on it directly from a Google search result.

Make sure then to ‘No Index’ (add this tag to the page) your variation pages so visitors cannot accidentally land on them directly from search results.

Giles’s take

“You can check if Google has indexed your variation pages using the following search query:

site:yourdomainname.com/variation_one.html

If it shows up, it’s indexed.

A quick fix is to add this code to your robots.txt file:

User-Agent: Googlebot
Disallow: /variation_one.html

It’s also a good way to sneakily check to see what pages your competitors are testing! Simply look at their robots.txt file and see what pages are hidden from Google. They are probably pages they’re testing 😉

You can also add a canonical tag (rel=“canonical”) to the variation page that points to the control page, so Google knows the control is the preferred content and doesn’t mark it down for duplicate content in search results.“
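If you want to double check that a robots.txt rule really covers your variation page before you launch, Python’s built-in robots.txt parser can do it. This is only a sketch. The domain and file names are the placeholders used above, and it checks the crawl rule only, not whether Google has already indexed the page.

```python
from urllib.robotparser import RobotFileParser

# The rule from above, written as it would appear in robots.txt
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /variation_one.html
"""


def is_blocked(robots_txt, user_agent, url):
    """Return True if the given robots.txt text tells this crawler not to fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


# Placeholder URLs for the variation and the control page
variation_blocked = is_blocked(ROBOTS_TXT, "Googlebot", "https://yourdomainname.com/variation_one.html")
control_blocked = is_blocked(ROBOTS_TXT, "Googlebot", "https://yourdomainname.com/index.html")
print("Variation blocked:", variation_blocked)
print("Control blocked:", control_blocked)
```

You want the variation blocked and the control left alone. If the control shows up as blocked, your rule is too broad.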

42. Make Sure Your A/B Testing Tool Doesn’t Cloak

Your website can tell whether a visitor is a Google spider or a human from the ‘user-agent’ sent with each request.

Some A/B testing tools filter out spiders from tests to get better test results.

Presenting different versions to the spider and normal visitors is called cloaking and can negatively affect your SEO rankings.

Giles’s take

“Ask your tool provider if they cloak content.

For the time it takes to ping a tweet to your provider, it is worth making sure your testing is not affecting your SEO standings.“

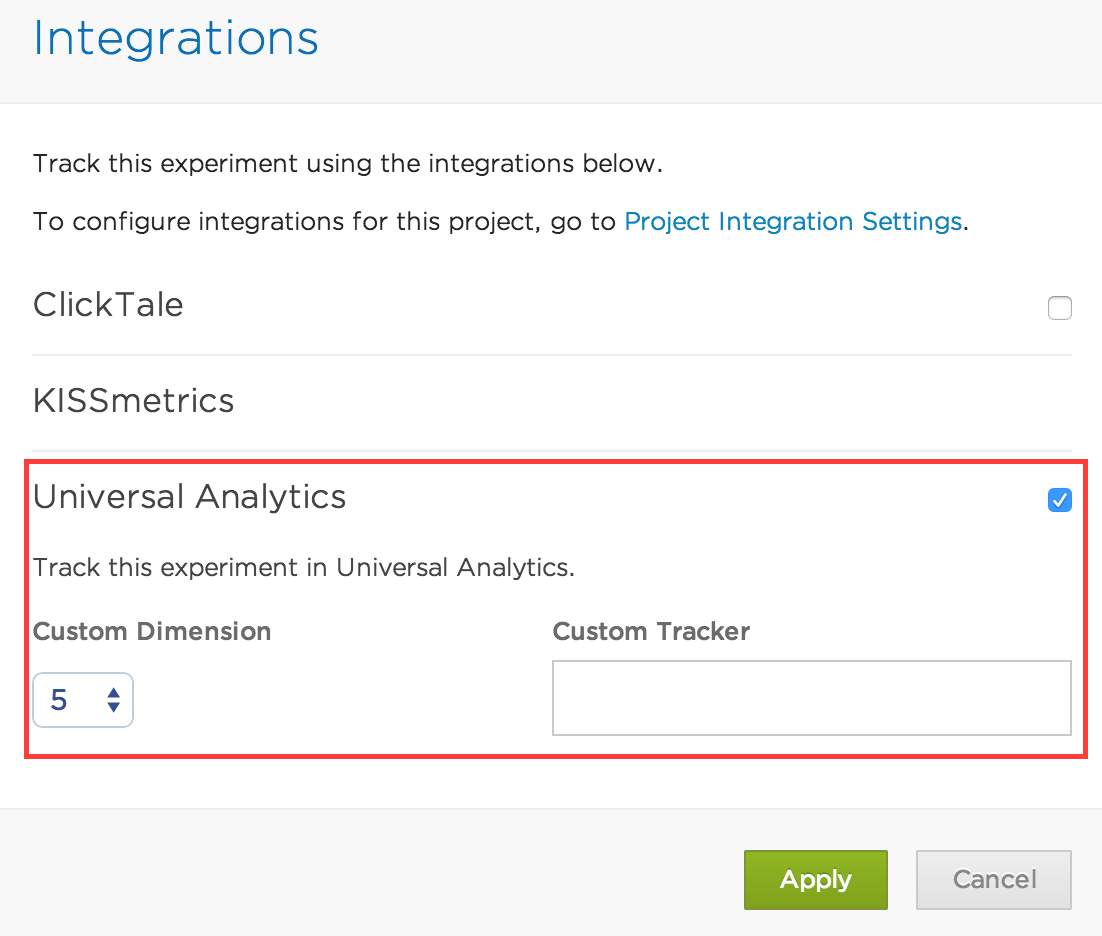

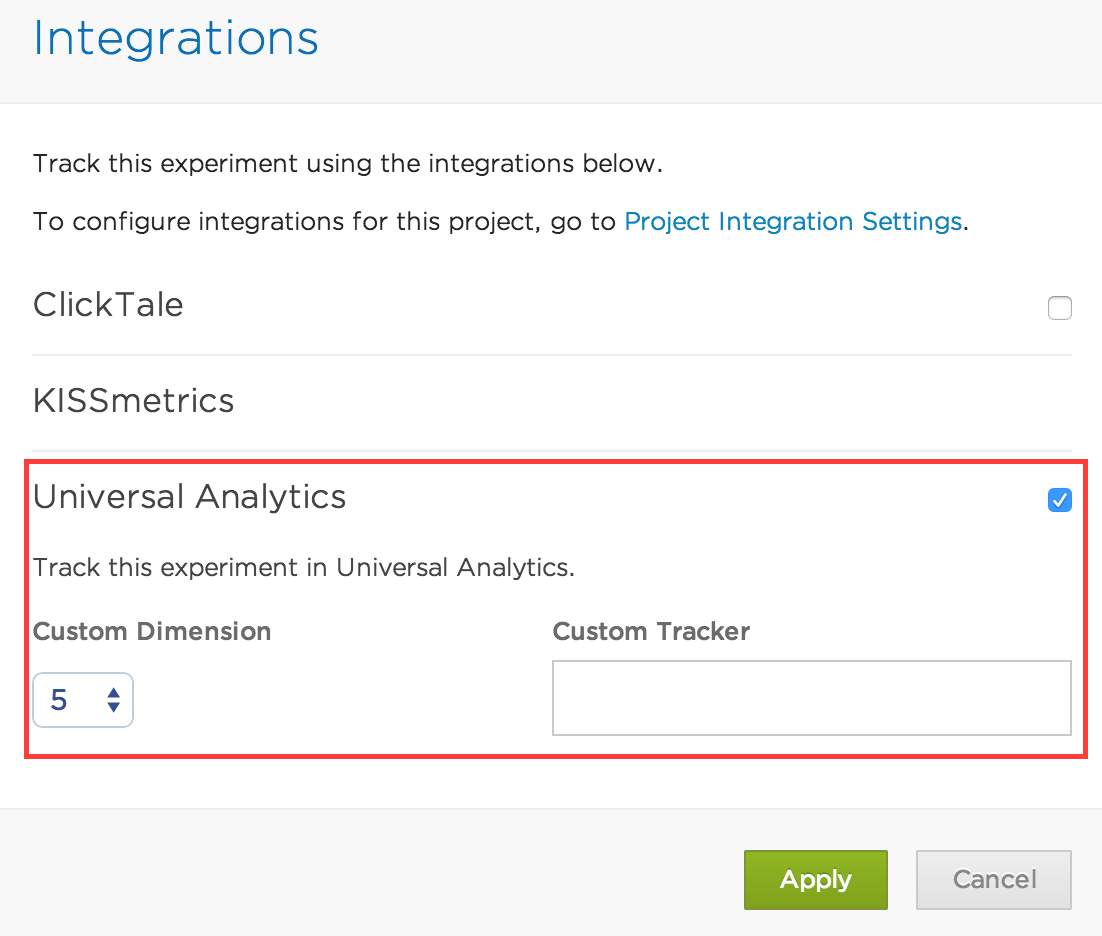

43. Integrate Your Test Data With Google Analytics

When it comes to testing tools the truth is there is no perfect solution.

This stuff is tricky to code and your data is never perfectly clean.

A great fail safe is to integrate your testing tool with Google Analytics.

That way you can cross check your testing tool results with GA and also simply look at the raw unique visitor data in GA and manually reconstruct your test data.

Giles’s take

“Just don’t believe the numbers as Craig Sullivan would say.

Cross check your test data with other sources like Google Analytics, bank accounts, GA goals, email list counts, Stripe reports.

Any way you can confirm or disprove your numbers, do it.“

44. Don’t Break Your Site When Testing And Lose Money

Seems like a no brainer but it is easy to make small mistakes that you don’t realize affect your business.

In one of my A/B tests I redesigned an ecommerce homepage and managed to remove the phone number from the header for both the control and the variation.

The shop didn’t take orders over the phone so it probably didn’t affect the profits right?

Well…turns out the phone number was a huge trust factor. Just by seeing it, people recognized the company as being legit (as most competitors had no telephone contact), and removing it sent conversions crashing down!

Giles’s take

“Obviously we are trying to increase profits with A/B testing as with most marketing initiatives.

So ensure you get the nitty gritty details right, as sometimes even small mistakes can cost big.

A great way to be proactive here is using checklists that evolve and become more sophisticated as you improve your craft. Grab a checklist of the best practices in this post here.“

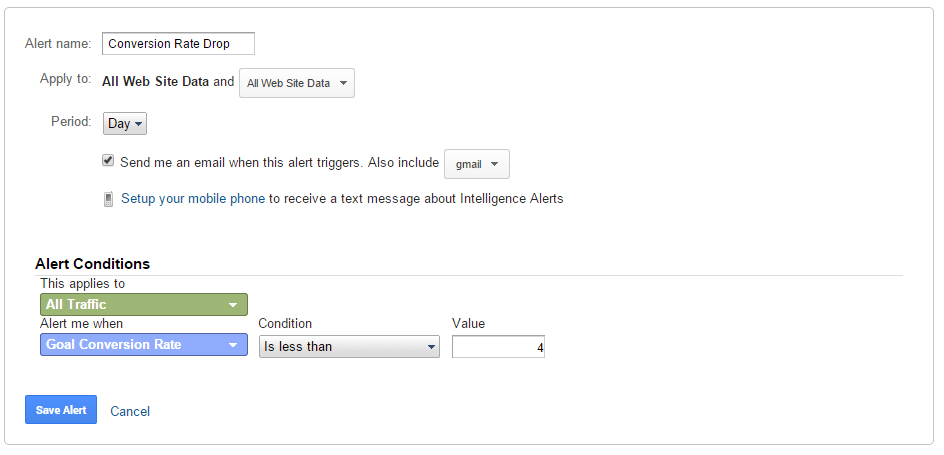

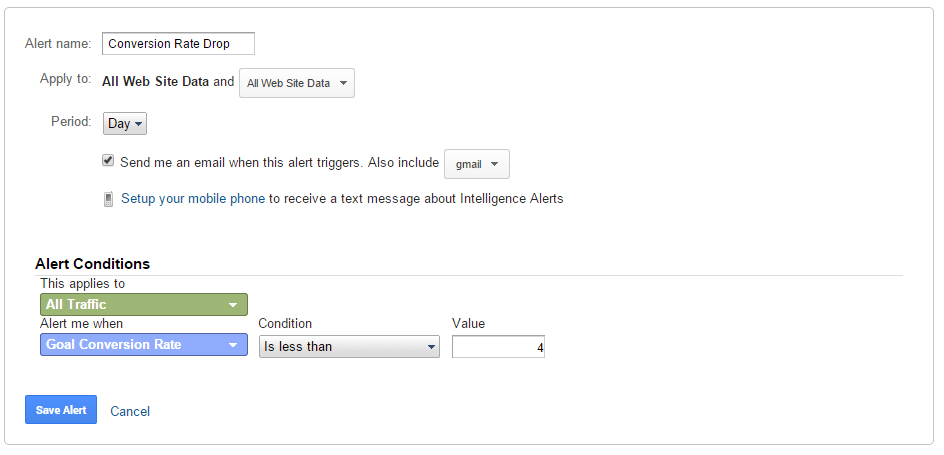

45. Set Up Custom Alerts In Google Analytics To Track Key Conversion Points

A reactive way of dealing with human or technical error in A/B testing is setting up custom alerts.

Custom alerts or intelligence alerts are a way to keep yourself updated with the changes in your key conversion goals.

You can establish custom intelligence alerts for traffic, conversion rate, goal completions, analytics movement and Google referrals.

Giles’s take

“When running an A/B test, set up custom alerts for the key conversion goals you will be affecting in case your test causes technical issues.

That way you can fix the problem before it costs you too much money!

You can receive the alerts as emails and as SMS.

Here’s how to set one up:

Navigate in your GA account to:

Admin > Custom Alerts

Then click ‘New Alert’.

Fill in as suggested above to track a conversion rate drop below your standard percentage.

Pick specific goal conversions you are affecting in your A/B test for more appropriate alerts.“

Best Practices While Running Your A/B Tests

Now you’ve learned the ins and outs of setting up your A/B tests right, I want to talk about just one point to remember while your tests are running.

It’s so important, it gets its own section!

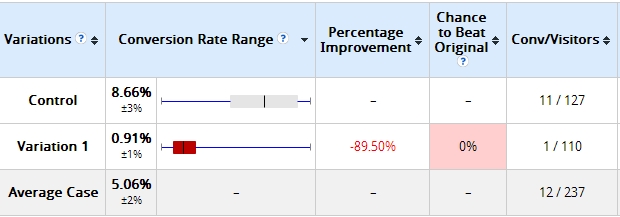

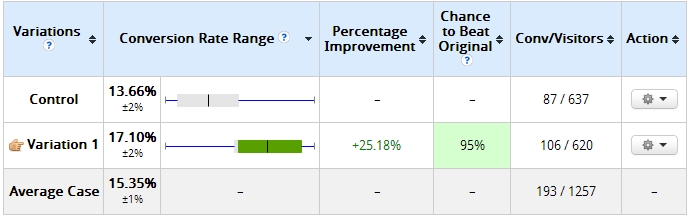

46. Don’t End Your Tests Too Soon

Let’s be honest, you’ve all stopped A/B tests too early.

And a big problem here is the testing tools themselves.

They can honestly be misleading and this is because it is in their best interest to be.

Peep Laja of ConversionXL points this out in this example.

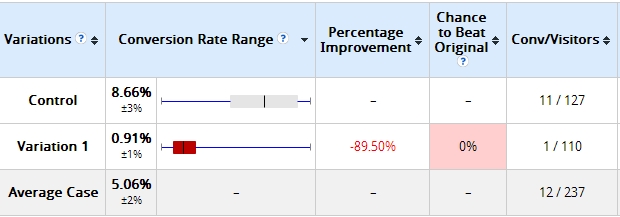

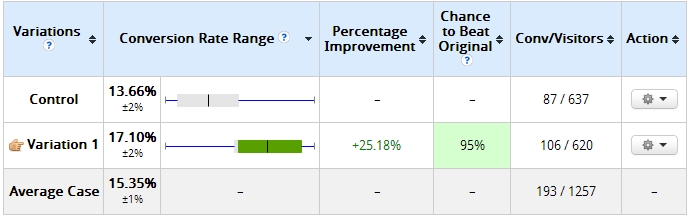

Here are the test results two days after the test had started:

The tool here suggests that Variation 1 has 0% chance of beating the original.

10 days later the results had turned around completely.

If he had stopped the test early according to the tool the results would have been completely wrong.

Giles’s take

“Stopping tests early is one of the most common ways people get testing wrong.

Here are some rules for when to stop your tests:

- The tests have been running for a complete week (Monday to Sunday) or better still a complete month or business cycle

- You pre calculated the sample size needed for each variation and met your criteria

- Each branch or variation in the test has had 250-350 conversions

- Statistical significance is, at least, 95%“
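The stopping rules above can be turned into a simple pre-flight check. Here is a rough Python sketch. The class and field names are invented for illustration, the “complete week” rule is approximated as a whole number of 7-day weeks, and the thresholds are simply the ones from the list.

```python
from dataclasses import dataclass


@dataclass
class ExperimentStatus:
    days_running: int
    visitors_per_variation: list      # e.g. [14200, 14150]
    conversions_per_variation: list   # e.g. [310, 365]
    required_sample_size: int         # pre-calculated, per variation
    significance: float               # e.g. 0.96 for 96%


def ready_to_stop(status, min_conversions=250, min_significance=0.95):
    """Check every stopping rule; return (all rules passed?, result per rule)."""
    checks = {
        # At least one full week, and only whole weeks
        "full_weeks": status.days_running >= 7 and status.days_running % 7 == 0,
        "sample_size": all(v >= status.required_sample_size for v in status.visitors_per_variation),
        "conversions": all(c >= min_conversions for c in status.conversions_per_variation),
        "significance": status.significance >= min_significance,
    }
    return all(checks.values()), checks


# Hypothetical test: significant after 5 days, but nowhere near done
early = ExperimentStatus(5, [4000, 4100], [90, 130], 14000, 0.97)
done, reasons = ready_to_stop(early)
print(done, reasons)
```

The point is that significance is only one of four boxes to tick. A test that is “significant” on day five still fails the other three.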

Best Practices For Interpreting Your A/B Test Results

Once you’ve completed your tests as above it’s time to crunch those numbers.

You’re not home and dry yet, so brush up on these best practices below and make sure you’re not falling at the last A/B testing hurdle.

47. Don’t Interpret A Random Fluctuation As A Winning Test

Random fluctuation, also known as statistical fluctuation, is when you get random changes in a data set that are not related to the changes you tested.

Imagine you tossed a coin ten times and heads came up 80% of the time.

This might lead you to believe that heads comes up the majority of the time.

Not true, because the sample size (10) is so small it is easy to get misleading test results.
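You can put a number on the coin example. With a fair coin, getting 8 or more heads in 10 tosses happens about 5.5% of the time by pure chance. Getting 80% heads over 1,000 tosses is practically impossible. Here is a short Python sketch of that calculation (the function name is just for illustration).

```python
from math import comb


def prob_at_least(k, n, p=0.5):
    """Probability of k or more heads in n tosses of a coin that lands heads with probability p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


p_small = prob_at_least(8, 10)       # 80%+ heads in 10 tosses
p_large = prob_at_least(800, 1000)   # 80%+ heads in 1,000 tosses

print(f"10 tosses:    {p_small:.4f}")
print(f"1,000 tosses: {p_large:.2e}")
```

Same 80%, completely different meaning. That’s why sample size comes first.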

Giles’s take

“Always use a sample size calculator when planning your A/B tests.

Make sure to calculate how many subjects will be needed for each branch (or variation) of your A/B test first.

This will not only make sure you reduce your chances of random fluctuations, but will also allow you to estimate the time needed to run the test.“

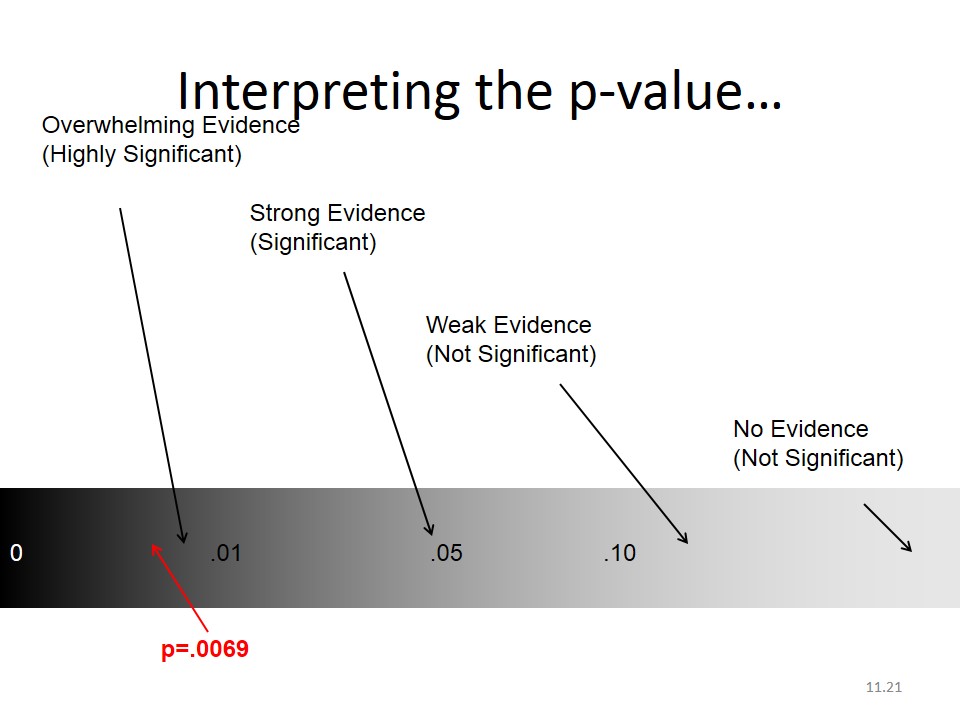

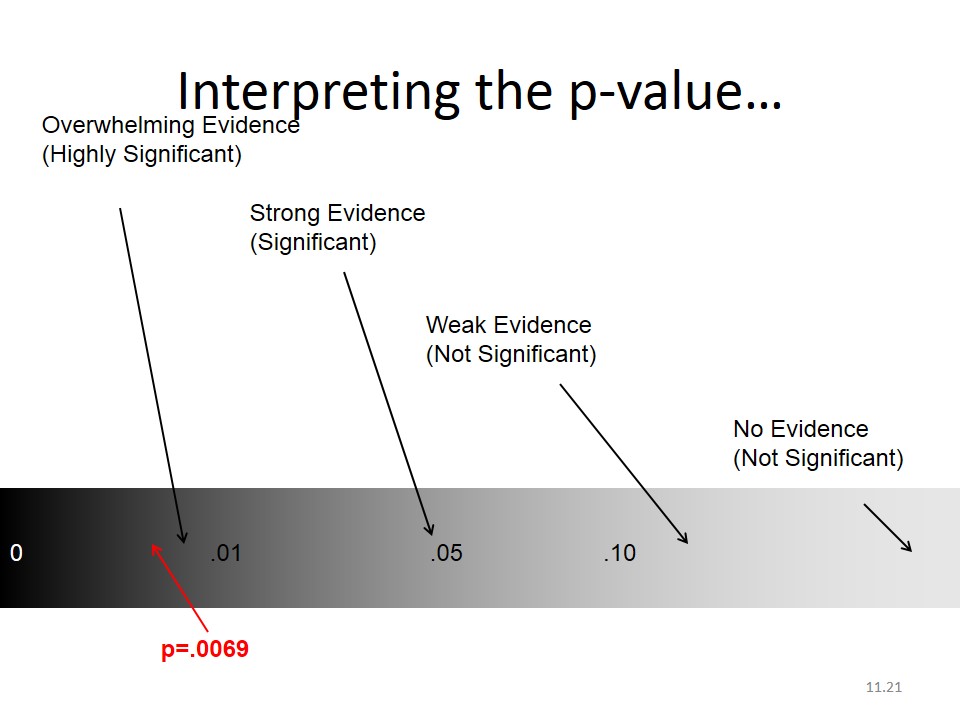

48. Understanding What P Values Really Mean

Ok, so what are P Values?

P Values are used to determine statistical significance in a hypothesis test.

They tell you how likely you would be to see results at least as extreme as yours if the null hypothesis were true.

Basically, a P value measures the compatibility between the data you collected and the null hypothesis.

Giles’s take

“High P values: Your sample data is compatible with a true null.

Low P values: Your sample data is unlikely under a true null.

A low P value should be interpreted as evidence to reject your null hypothesis for the testing population.“
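To make this concrete, here is a minimal Python sketch of one common way to get a P value for an A/B test: a two-sided, two-proportion z-test. Your testing tool may well use a different method, so treat this as an illustration only. The visitor and conversion numbers are invented.

```python
import math
from statistics import NormalDist


def two_sided_p_value(conv_a, n_a, conv_b, n_b):
    """P value for the null hypothesis that A and B have the same conversion rate."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    # Pooled rate: the best estimate of the shared rate if the null is true
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical test: 300 vs 360 conversions from 10,000 visitors each
p = two_sided_p_value(300, 10000, 360, 10000)
print(f"P value: {p:.4f}")
```

A P value under 0.05 corresponds to the 95% significance threshold used throughout this article. It still says nothing about how big the lift is or whether you collected enough data.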

49. Stop Focusing On CVR (Average Conversion Rate)

What data do you look at when interpreting your A/B test results?

Some marketers look at sample size, conversions and confidence levels.

The more diligent will consider statistical power and test length.

But few, no I’d go as far as to say nearly none, consider the lead-to-MQL rate, or the number of marketing qualified leads (MQLs) that are generated.

Most people look at the conversion rate of all their traffic, not the conversion rate of their target traffic.

And without segmenting you can get really misleading test results. You can end up making changes that bring in more unqualified leads and decrease the conversion rate among your target audience.

This means you could be optimizing for more conversions, but of less qualified leads, therefore optimizing for less potential revenue and profits…not good.

Giles’s take

“Running A/B tests and interpreting the results without segmenting the data can lead to wasted time and resources.

Imagine you ran a test, Control vs Variation A, Variation A wins with 150% lift in CVR. Winning test…right?

What if your non-target audience converted better with Variation A, while your target audience saw no change. You’d be optimizing for more unqualified leads.

If your target audience hates Variation A but your non-target audience loves it you’re losing customers as well as getting more unqualified leads!

Make sure to segment your traffic and understand how conversion rate changes affect lead quality.

Measure the value of the leads on the back end for your business, measure the profits not the CVR.“
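Here is what that 150% “win” can look like once you split it by segment. The numbers below are entirely made up to match the scenario, and the segment and function names are only for illustration.

```python
def lift(control_rate, variation_rate):
    """Relative change of the variation versus the control."""
    return (variation_rate - control_rate) / control_rate


def segment_lifts(results):
    """Return the lift for each segment plus the blended 'all_traffic' lift."""
    lifts = {}
    totals = {"control": [0, 0], "variation": [0, 0]}
    for segment, arms in results.items():
        rates = {}
        for arm, (conversions, visitors) in arms.items():
            rates[arm] = conversions / visitors
            totals[arm][0] += conversions
            totals[arm][1] += visitors
        lifts[segment] = lift(rates["control"], rates["variation"])
    lifts["all_traffic"] = lift(
        totals["control"][0] / totals["control"][1],
        totals["variation"][0] / totals["variation"][1],
    )
    return lifts


# Hypothetical results as (conversions, visitors)
results = {
    "target": {"control": (100, 2000), "variation": (100, 2000)},
    "non_target": {"control": (100, 8000), "variation": (400, 8000)},
}

lifts = segment_lifts(results)
for name, value in lifts.items():
    print(f"{name}: {value:+.0%}")
```

In this example all traffic shows a 150% lift, yet the target audience didn’t move at all. Every extra conversion came from people who are unlikely to become customers.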

50. Regression To The Mean

In the beginning of some tests you see crazy fluctuations in conversion rate data.

And you ask yourself, what is happening here!?

Regression to the mean simply says things will even out over time.

So don’t go jumping to conclusions when running tests by stopping them early.

Giles’s take

“A good example is to imagine a researcher. They give a large group of people a test and select the best performing 5%.

These people would likely score worse on average if tested again.

In the same way, the worse performing 5% would likely score better if tested again.

In both cases the extremes of distribution are likely to ‘regress to the mean’ because of random variations in results.

This is known as regression to the mean.

Aka.

“The phenomenon that if a variable is extreme on its first measurement, it will tend to be closer to the average on its second measurement.”

So take care when calling tests early, based only on reaching significance, it’s possible you’re seeing a false positive. And there’s a good chance your ‘winner’ will regress to the mean.“
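You can see regression to the mean for yourself with a quick simulation of the researcher example. This Python sketch assumes each test score is a person’s true skill plus random luck. The numbers (10,000 people, average score of 50) are arbitrary.

```python
import random
from statistics import mean


def simulate_retest(n_people=10000, top_share=0.05, seed=1):
    """Test everyone twice and compare how the top scorers from test one do on test two."""
    rng = random.Random(seed)
    skill = [rng.gauss(50, 10) for _ in range(n_people)]
    # Each test score = true skill + luck on the day
    first = [s + rng.gauss(0, 10) for s in skill]
    second = [s + rng.gauss(0, 10) for s in skill]

    # Select the best performing 5% on the first test
    cutoff = sorted(first, reverse=True)[int(n_people * top_share) - 1]
    top = [i for i in range(n_people) if first[i] >= cutoff]

    return mean(first[i] for i in top), mean(second[i] for i in top), mean(second)


top_first, top_second, everyone = simulate_retest()
print(f"Top 5% on first test:  {top_first:.1f}")
print(f"Same people on retest: {top_second:.1f}")
print(f"Everyone on retest:    {everyone:.1f}")
```

The top group still beats the average on the retest, but by a lot less. Part of their first score was luck, and luck doesn’t repeat. An early “winner” in your A/B test behaves the same way.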

51. Novelty Effect

The novelty effect in A/B testing is exactly what it sounds like.

You see a conversion rate change for your new variation.

A lift! Boom!

Wrong.

The truth is, you are only seeing a lift because the variation is new.

New and shiny, nothing more.

Giles’s take

“To truly test if your new variation is causing a conversion rate change you can segment your new and returning visitors.

If it is simply the novelty effect, the new variation will see a lift with new visitors.

When returning visitors get used to the new variation, it should see a lift with them also.

You can learn more about testing for the novelty effect in this Adobe article.“

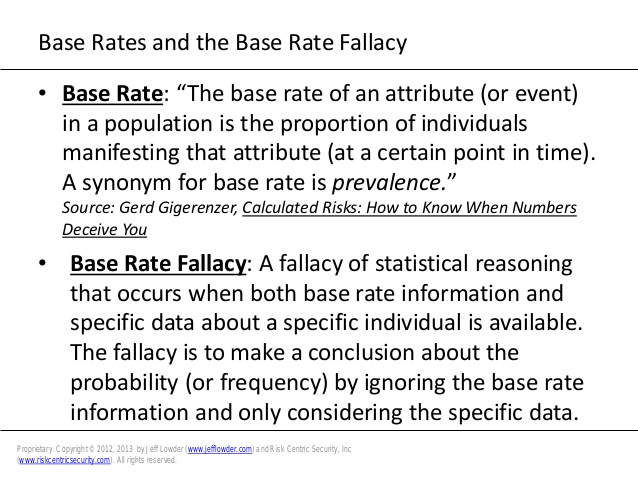

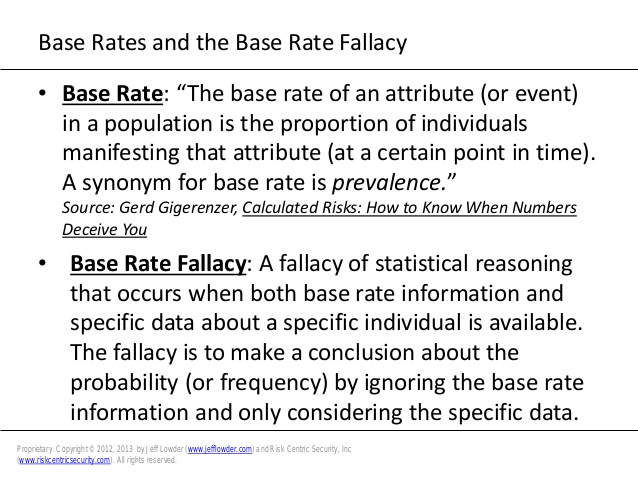

52. Base Rate Fallacy

Humans have a funny way of only hearing and seeing what they want to.

Base rate fallacy or base rate neglect is when people ignore statistical information in favor of using irrelevant information, that they incorrectly believe is relevant, to make a decision.

This is an irrational behavior.

Giles’s take

“Here’s a good example from Logically Fallacious.

Example: Only 6% of applicants make it into this school, but my son is brilliant! They are certainly going to accept him!

Explanation: Statistically speaking, there is a 6% chance they will accept him. The school is for brilliant kids, so the fact that her son is brilliant is a necessary condition to be part of the 6% who do make it.“

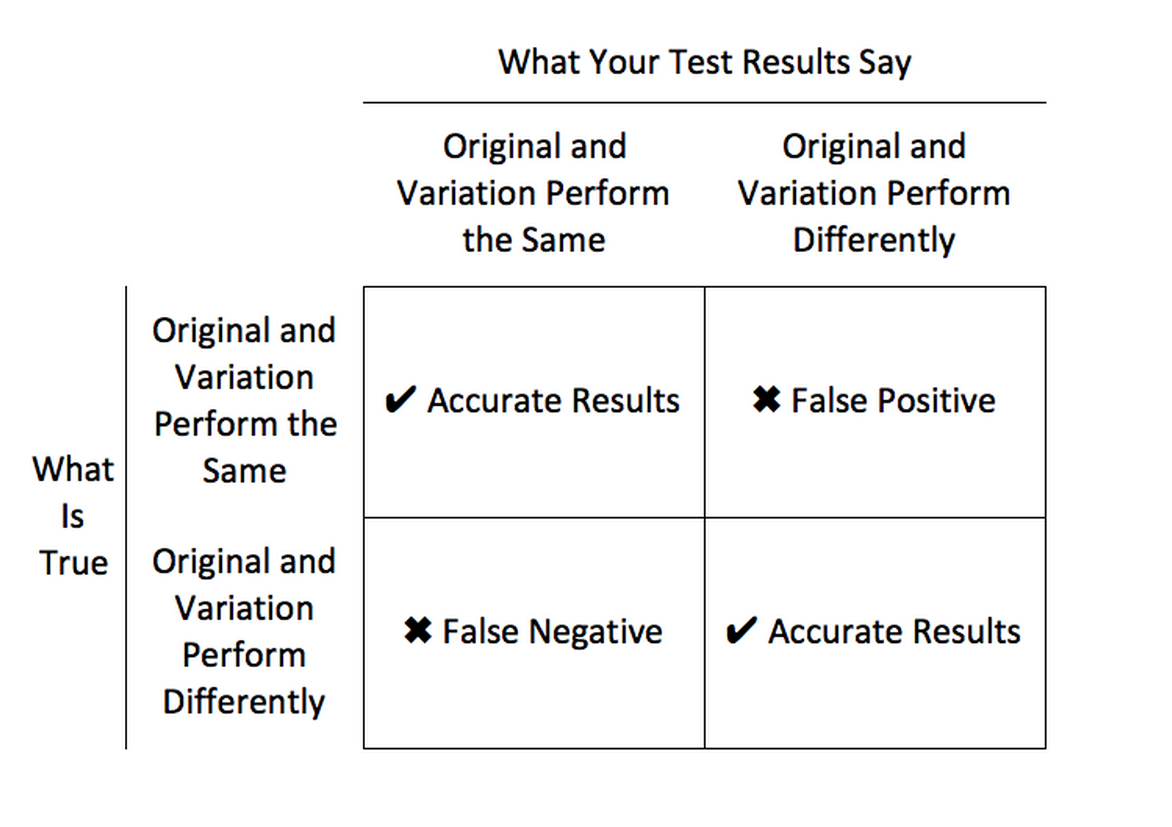

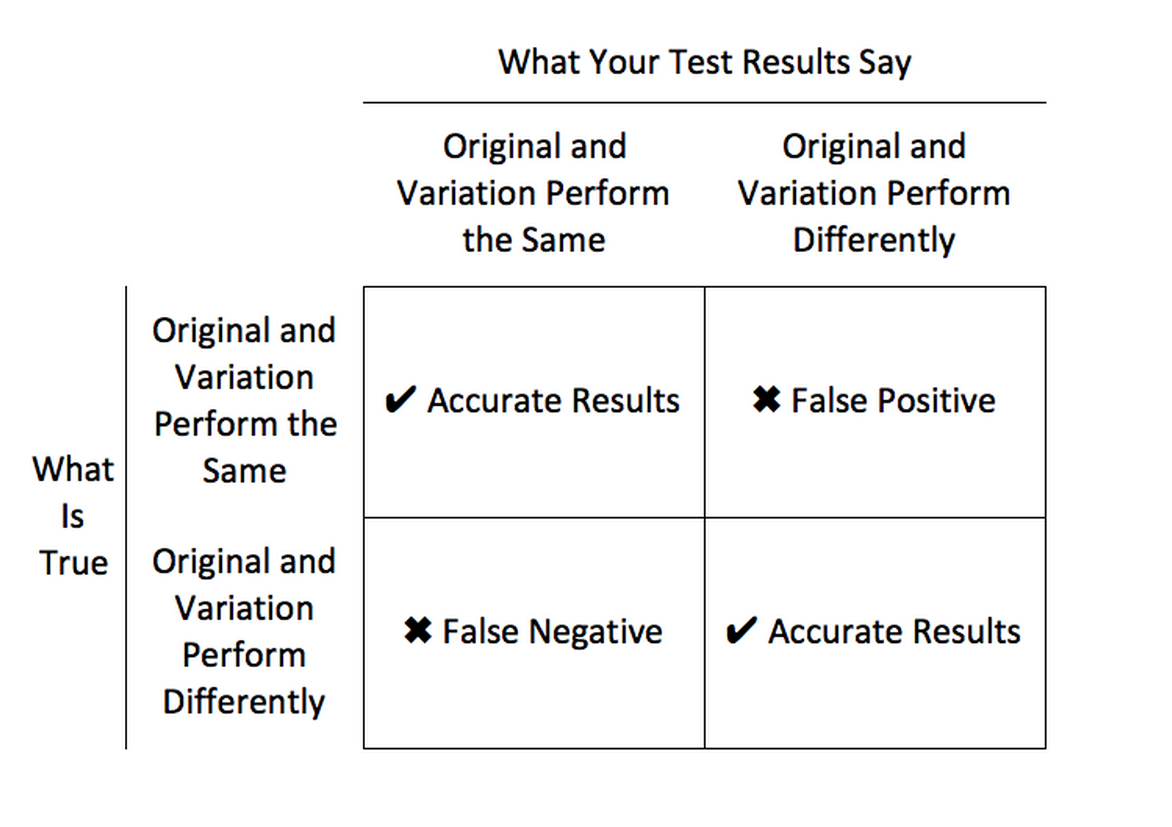

53. Pay Attention To False Positives (type I errors)

A false positive is when your A/B test results suggest something is validated when it is not.

These normally occur when many variations are tested at once.

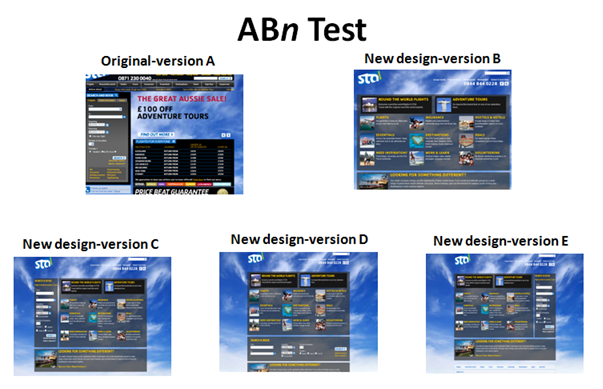

Start off with large changes and just run A/B tests not A/B/n tests.

It’s easy to assume you are right especially when the data is leading.

Paul from LeadOne advises:

“Always look for ways to prove yourself wrong, assumptions are the killer of great conversion rates.”

Giles’s take

“Testing too many variations at once increases your chances of getting a false positive.

Focus on A/B testing first and then use multivariate testing for refinement later.“
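Here is the arithmetic behind that. If each variation is compared to the control at a 5% significance level, and you treat the comparisons as independent (a simplifying assumption), the chance of at least one false positive grows quickly with the number of variations. The function name is just for illustration.

```python
def family_wise_error_rate(num_comparisons, alpha=0.05):
    """Chance of at least one false positive across independent comparisons."""
    return 1 - (1 - alpha) ** num_comparisons


for variations in (1, 3, 5, 10):
    rate = family_wise_error_rate(variations)
    print(f"{variations} variation(s) vs control: {rate:.1%} chance of a false positive")
```

With one variation you’re at 5%. With ten you’re at roughly 40%, which is why a plain A/B test is the safer place to start.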

54. Don’t Ignore Small Gains

Don’t think small wins are worthless in A/B testing.

Small, incremental, month over month improvements are more realistic and definitely worth having.

The compounding effect of conversion increases over a year can be astounding.

Giles’s take

“Don’t get wrapped up in hitting a home run.

Shoot for a 15% conversion rate increase quarterly or 5% month over month.

Now work out what that would mean for your business, compounded over the next year.

…Not too shabby ey?!

Remember, small gains count and can compound over time.“
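The compounding is easy to work out. A 5% lift every month for 12 months comes to roughly an 80% increase over the year, and 15% every quarter comes to roughly 75%. Here is the calculation as a short Python sketch, with the function name made up for illustration.

```python
def compounded_lift(rate_per_period, periods):
    """Total relative increase after repeating the same lift every period."""
    return (1 + rate_per_period) ** periods - 1


monthly = compounded_lift(0.05, 12)    # 5% month over month for a year
quarterly = compounded_lift(0.15, 4)   # 15% per quarter for a year

print(f"5% monthly:    {monthly:.1%} over the year")
print(f"15% quarterly: {quarterly:.1%} over the year")
```

Multiply your current monthly revenue by one plus that number to see what a year of small wins is worth to you.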

55. Run Important Tests Twice To Double Check Your Results

Just because a test wins, doesn’t mean the money is in the bank.

William Harris, VP of marketing & growth at Dollarhobbyz.com, suggests:

“Double check your results. It’s so common to have a test come back statistically significant in favor of a result, only to have that completely negated by a follow up test. Run the test. Then run it again. If you get conflicting results, run it a third time. The worst thing you can do is run a test and make a hasty change to your website that causes you to lose money.”

A/B Testing Best Practices Checklist

Remember A/B testing is not a silver bullet, it’s just one step in a complete CRO process.

It’s important to always be testing and to run the right tests first. Focus on reducing the resource cost to opportunity ratio.

For those of you who are hell bent on getting testing right, I’ve created an A/B testing best practices checklist you can download below.

Let me know in the comments if I missed any best practices you follow when testing your website changes…